pacman::p_load(olsrr, corrplot, ggpubr, sf, spdep,

GWmodel, tmap, tidyverse, gtsummary,

ranger, SpatialML, janitor, stargazer,

lmtest, Metrics)Predicting HDB Public Housing Resale Prices using Geographically Weighted Methods

Take-home Exercise 3

1 Getting Started

In this Take-home Exercise 3, we will try using geographically weighted methods to predict the resale price of HDB public housing in Singapore. You will find in the next to sections a brief description of the data sets used and where to retrieve them as well as the list of all the libraries needed for this project and a description of their specific usage.

1.1 Installing and Loading Packages

For the purpose our this project, we have selected a list of libraries that will allow to perform all the necessary data cleaning, handling and analysis.

The following list shows the libraries that will be used in this assignment:

pacman, tidyverse, janitor, sf, spdep, tmap, corrplot, olsrr, knitr, httr, onemapsapi, xml2, rvest, stringr, ggmap, ggpubr, GWmodel, SpatialML, Metrics

To install and load the packages, we use the p_load() function of the pacman package. It automatically checks if packages are installed, installs them if they are not installed, and loads the packages into the R environment.

pacman::p_load(knitr, httr, onemapsgapi, xml2, rvest,

stringr, ggmap)options(digits = 15)1.2 The Data

For the purpose of this assignment, extensive amount of data was extracted from the web. You will find below a table that directs you to the webpage on which you can retrieve the data sets.

| Name | Format | Source |

|---|---|---|

| Resale Flat Prices | csv | data.gov.sg |

| Master Plan 2019 Subzone | shp | prof |

| MRT Exit Locations | shp | datamall.lta.gov.sg |

| Bus Stop Locations | shp | datamall.lta.gov.sg |

| Supermarkets | kml | data.gov.sg |

| Primary Schools | HTML scrapping | Here |

| Shopping Malls | HTML scrapping | Wikipedia |

| Rest of the data | API | OneMapAPI |

2 Retrieving and Importing the Geospatial Data

In this first section that addresses the data import, we will be going through the necessary work to load the geospatial data correctly into our R environment.

Note that in this section, we will be load data previously retrieved from the web, but also using some APIs to collect necessary variables that will play an important role in our regression models.

2.1 Master Plan 2019 with sub-zones

The first geospatial data set that we are importing is the 2019 Master Plan with sub-zones. Since it is a shp file, we use the st_read() function of the sf package to properly import the data frame.

mpsz.sf = st_read(dsn = "data/geospatial/mpsz2019",

layer = "MPSZ-2019")Reading layer `MPSZ-2019' from data source

`C:\p-haas\IS415\Take-home_Ex\Take-home_Ex03\data\geospatial\mpsz2019'

using driver `ESRI Shapefile'

Simple feature collection with 332 features and 6 fields

Geometry type: MULTIPOLYGON

Dimension: XY

Bounding box: xmin: 103.605700705134 ymin: 1.15869870063517 xmax: 104.088483065163 ymax: 1.47077483208461

Geodetic CRS: WGS 84The import was successful, however, before moving on I would like to check if all geometries are valid, and also if the projection code is correectly encoded.

any(st_is_valid(mpsz.sf) == FALSE)[1] TRUEIt seems like we have invalid geometries. Now, let’s check the CRS code of the data frame.

st_crs(mpsz.sf)Coordinate Reference System:

User input: WGS 84

wkt:

GEOGCRS["WGS 84",

DATUM["World Geodetic System 1984",

ELLIPSOID["WGS 84",6378137,298.257223563,

LENGTHUNIT["metre",1]]],

PRIMEM["Greenwich",0,

ANGLEUNIT["degree",0.0174532925199433]],

CS[ellipsoidal,2],

AXIS["latitude",north,

ORDER[1],

ANGLEUNIT["degree",0.0174532925199433]],

AXIS["longitude",east,

ORDER[2],

ANGLEUNIT["degree",0.0174532925199433]],

ID["EPSG",4326]]It looks like the EPSG and coordinate system does not correspond to the Singaporean SVY21. So, we will change that. We pipe the st_transform() and st_make_valid() functions to solve the two issues we had just pointed out.

mpsz.sf = mpsz.sf %>%

st_make_valid() %>%

st_transform(3414)any(st_is_valid(mpsz.sf) == FALSE)[1] FALSEst_crs(mpsz.sf)Coordinate Reference System:

User input: EPSG:3414

wkt:

PROJCRS["SVY21 / Singapore TM",

BASEGEOGCRS["SVY21",

DATUM["SVY21",

ELLIPSOID["WGS 84",6378137,298.257223563,

LENGTHUNIT["metre",1]]],

PRIMEM["Greenwich",0,

ANGLEUNIT["degree",0.0174532925199433]],

ID["EPSG",4757]],

CONVERSION["Singapore Transverse Mercator",

METHOD["Transverse Mercator",

ID["EPSG",9807]],

PARAMETER["Latitude of natural origin",1.36666666666667,

ANGLEUNIT["degree",0.0174532925199433],

ID["EPSG",8801]],

PARAMETER["Longitude of natural origin",103.833333333333,

ANGLEUNIT["degree",0.0174532925199433],

ID["EPSG",8802]],

PARAMETER["Scale factor at natural origin",1,

SCALEUNIT["unity",1],

ID["EPSG",8805]],

PARAMETER["False easting",28001.642,

LENGTHUNIT["metre",1],

ID["EPSG",8806]],

PARAMETER["False northing",38744.572,

LENGTHUNIT["metre",1],

ID["EPSG",8807]]],

CS[Cartesian,2],

AXIS["northing (N)",north,

ORDER[1],

LENGTHUNIT["metre",1]],

AXIS["easting (E)",east,

ORDER[2],

LENGTHUNIT["metre",1]],

USAGE[

SCOPE["Cadastre, engineering survey, topographic mapping."],

AREA["Singapore - onshore and offshore."],

BBOX[1.13,103.59,1.47,104.07]],

ID["EPSG",3414]]The two issues seem to be solved. Note that I show you how to proceed when importing geospatial data here but will not do so in such an extensive manner later. My goal here is to show you how to replicate my way of proceeding when handling the data for the first time. To make the reading easier, I have cut out all my exploration of this webpage and left only the essential and necessary code.

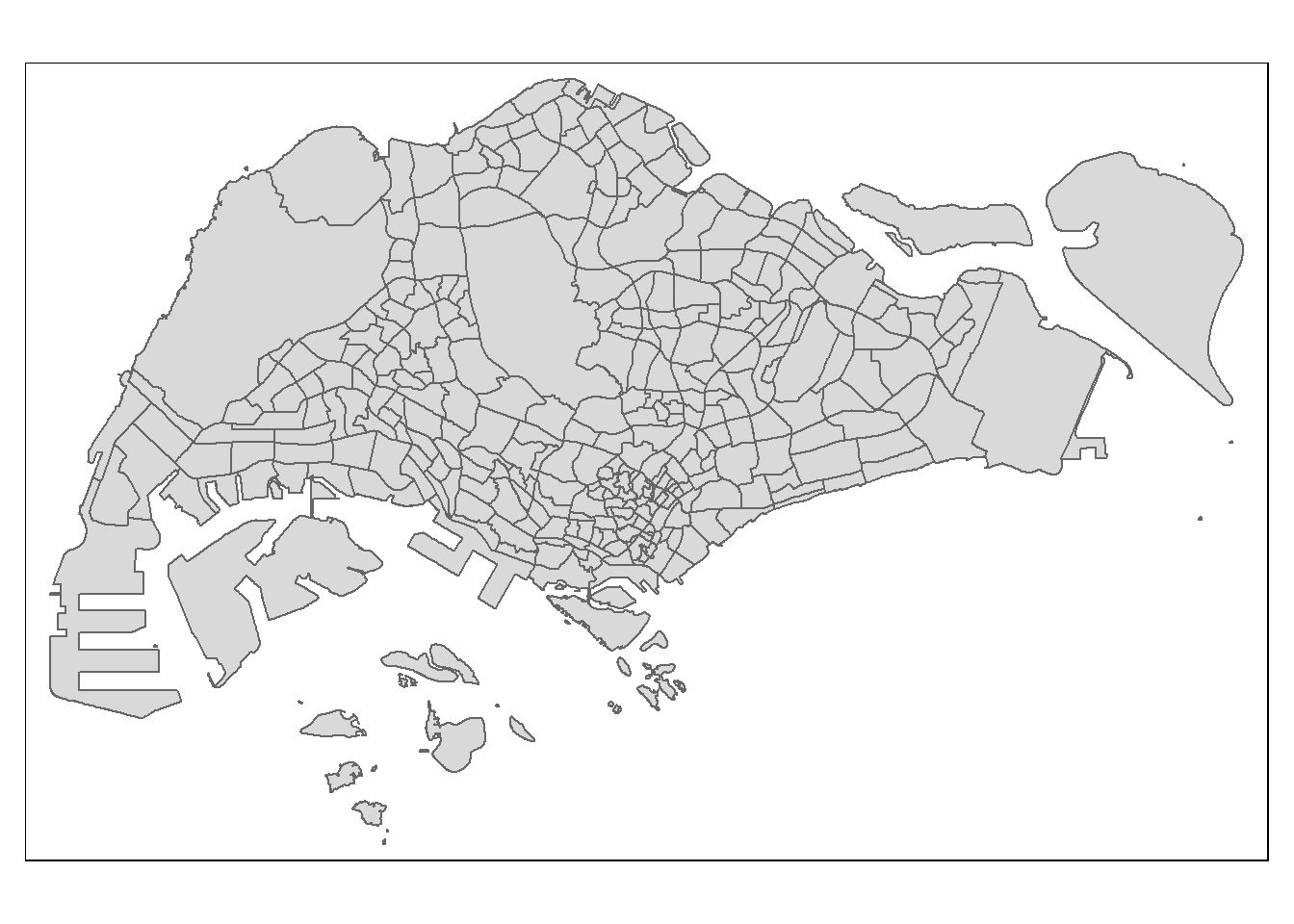

Using the qtm() function of the tmap package, we can make a quick visualization of the geometry stored in the sf data frame.

qtm(mpsz.sf)

2.2 MRT Train stations

Importing MRT Exits into an sf data frame.

As explained just before, I will be sticking with the necessary code only from now on. Using the following code chunk, we import the MRT Train Stations into an sf data frame.

mrt_exits.sf = st_read(dsn = "data/geospatial/TrainStationExit/TrainStationExit",

layer = "Train_Station_Exit_Layer") %>%

st_transform(crs=3414)Reading layer `Train_Station_Exit_Layer' from data source

`C:\p-haas\IS415\Take-home_Ex\Take-home_Ex03\data\geospatial\TrainStationExit\TrainStationExit'

using driver `ESRI Shapefile'

Simple feature collection with 562 features and 2 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 6134.08550000004 ymin: 27499.6967999991 xmax: 45356.3619999997 ymax: 47865.9227000009

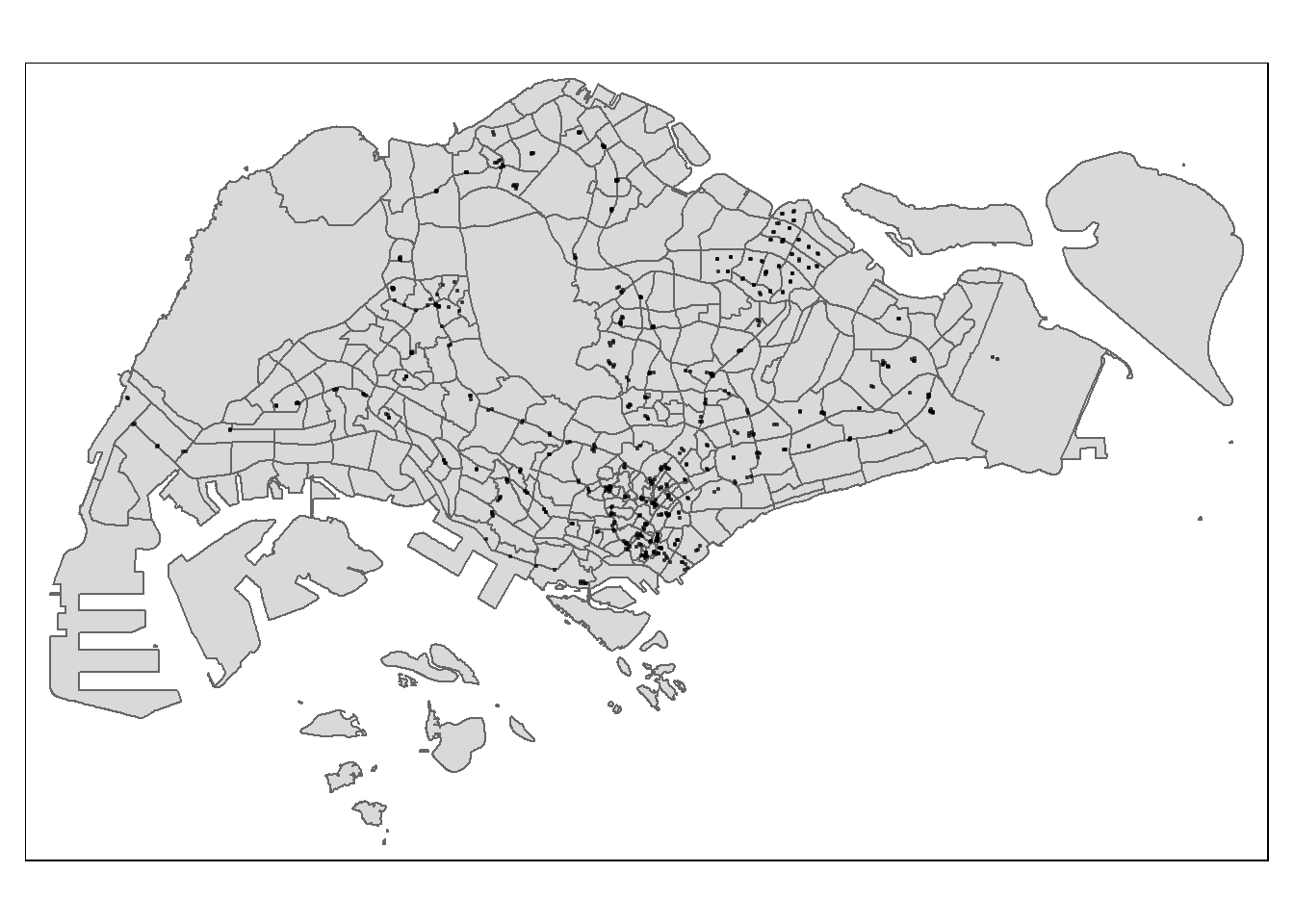

Projected CRS: SVY21Using the tmap package, we can conveniently plot the MRT Exits on the Singaporean map.

tmap_mode("plot")

tm_shape(mpsz.sf)+

tm_polygons()+

tm_shape(mrt_exits.sf)+

tm_dots(alpha = 0.5)

2.3 Bus Stop Locations

Importing Bus Stops into an sf data frame.

bus_stops.sf = st_read(dsn = "data/geospatial/BusStopLocation/BusStop_Feb2023",

layer = "BusStop") %>%

st_transform(3414)Reading layer `BusStop' from data source

`C:\p-haas\IS415\Take-home_Ex\Take-home_Ex03\data\geospatial\BusStopLocation\BusStop_Feb2023'

using driver `ESRI Shapefile'

Simple feature collection with 5159 features and 3 fields

Geometry type: POINT

Dimension: XY

Bounding box: xmin: 3970.12161372974 ymin: 26482.1007183008 xmax: 48284.5630137296 ymax: 52983.8165183011

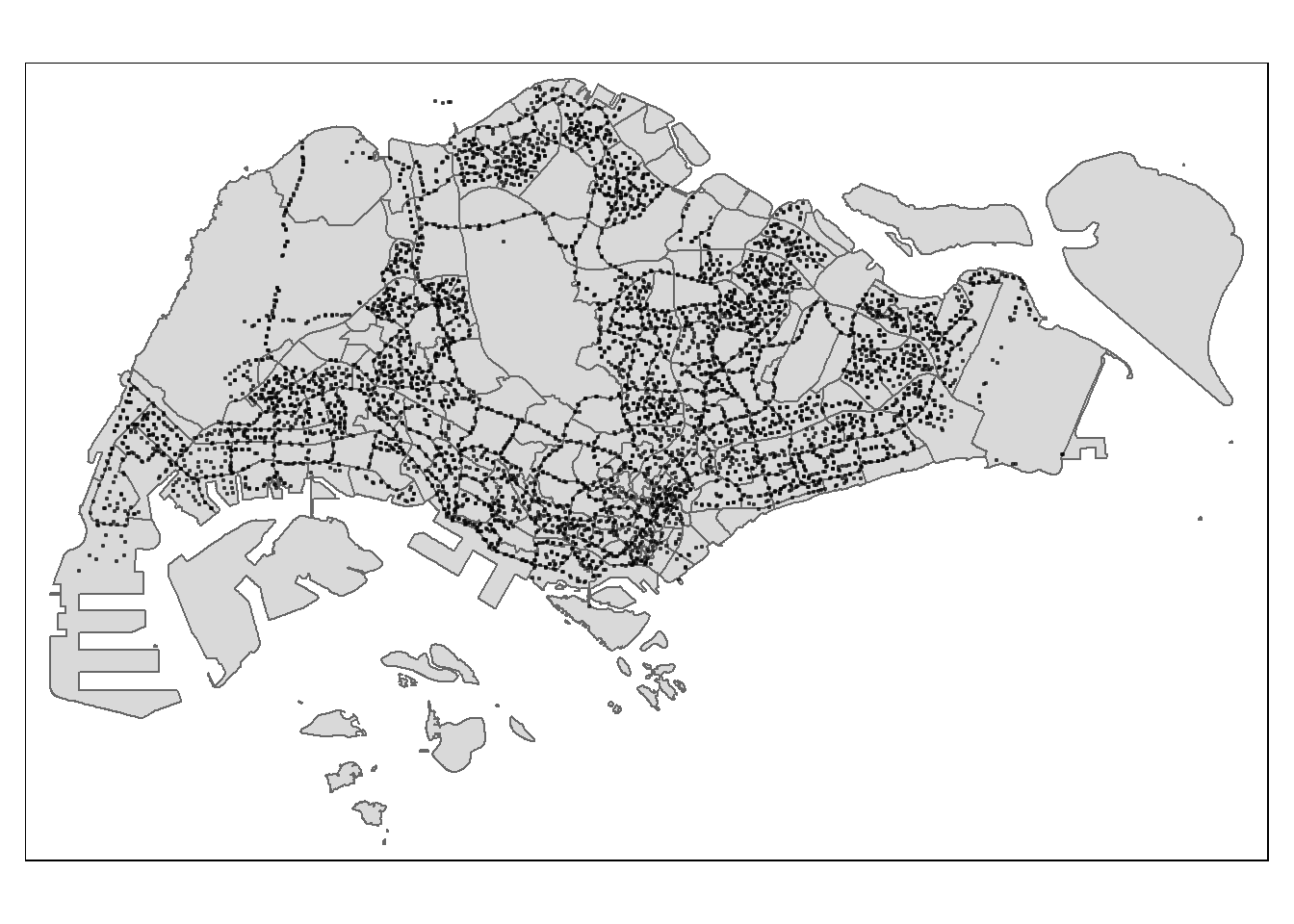

Projected CRS: SVY21tmap_mode("plot")

tm_shape(mpsz.sf)+

tm_polygons()+

tm_shape(bus_stops.sf)+

tm_dots(alpha = 0.5)

2.4 Supermarket data

Importing Supermarket Locations into an sf data frame.

supermarkets.sf = st_read("data/geospatial/supermarkets/supermarkets-kml.kml") %>%

st_transform(3414)Reading layer `SUPERMARKETS' from data source

`C:\p-haas\IS415\Take-home_Ex\Take-home_Ex03\data\geospatial\supermarkets\supermarkets-kml.kml'

using driver `KML'

Simple feature collection with 526 features and 2 fields

Geometry type: POINT

Dimension: XYZ

Bounding box: xmin: 103.625764663231 ymin: 1.2471504063107 xmax: 104.003578458884 ymax: 1.46152554782754

z_range: zmin: 0 zmax: 0

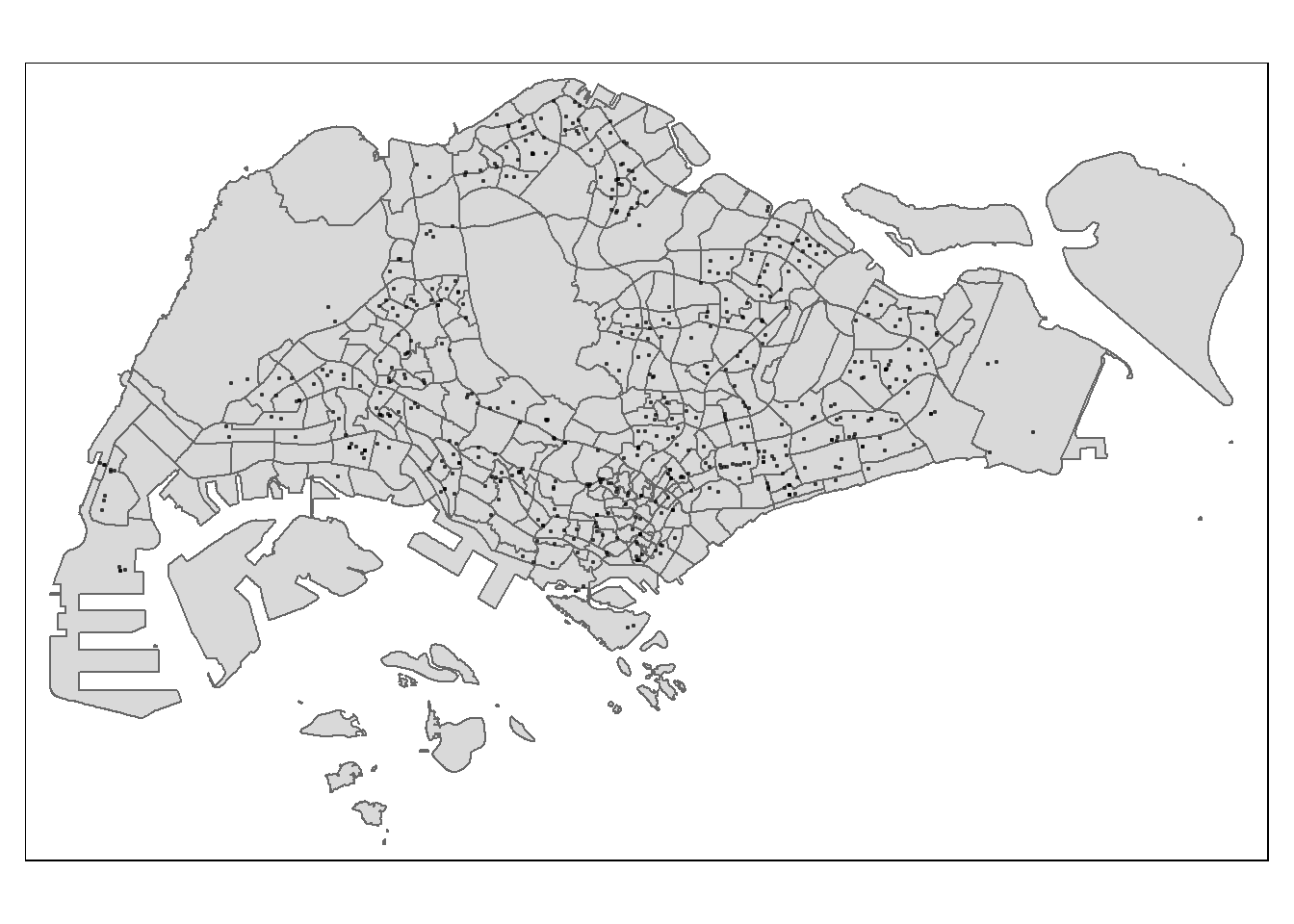

Geodetic CRS: WGS 84tmap_mode("plot")

tm_shape(mpsz.sf)+

tm_polygons()+

tm_shape(supermarkets.sf)+

tm_dots(alpha = 0.5)

2.5 Retrieving the independent variables using the OneMap API

We will be using the One Map Singapore API to retrieve some of the independent variables that we will be using later on in our regression model.

We will be taking a few preliminary steps before extracting the variables. You will find everything you need to replicate my work in the following sections.

2.5.1 Retrieving the API token using the onemapsgapi package

The first step to getting the API key is to create an account on the One Map website (click here to access the registration tutorial).

Now that you are registered, we may retrieve the API key using the onemapsgapi package and its get_token() function. By simply entering your registration email and password, you will get a valid API key for a three-day period.

Note that if you want to learn more about the One Map API, you should definitely check their website and their web app that allows to test the API’s services.

token_api = get_token(email = "xxx@xxx.xxx",

password = "...")2.5.2 Using the API to retrieve the variables available on OneMap

The first step to retrieving our independent variables is to look at the available data provided by One Map. We do so using the httr package that allows us to make HTML queries. Using the GET() function, we retrieve every theme info of the OneMap API.

url = "https://developers.onemap.sg/privateapi/themesvc/getAllThemesInfo"

query <- list("token" = token_api)

result <- GET(url, query = query)Using the content() function, we may look at the information retrieved, however, you should be made aware that the result from our GET() command is quite messy. So, you will see in the next section how we extract the theme names and store them into a list.

content(result)2.5.3 Creating the variable list

As explained, the information retrieved is quite messy. So, we will be storing in a list the essential information that we will use later on.

Using a for loop, I store the theme specific information into a list called themes_list.

themes_list = list()

for (i in 1:length(content(result)$Theme_Names)){

themes_list = append(themes_list, content(result)$Theme_Names[[i]])

}To take a look at the information stored into our list, you may use the next code chunk. However, since the list is quite long, I won’t print the output/information here and will directly give you a list you the variables I have extracted.

themes_listIn the next code chunk, you may see the variables that I carefully selected. They give information about: Eldercare Services, Hawker Centres, Food Courts, Parks in Singapore, Kindergartens, and Childcare Centres.

ind_variables = c("eldercare", "hawkercentre", "hawkercentre_new", "healthier_hawker_centres", "maxwell_fnb_map", "healthiercaterers", "relaxsg", "nationalparks", "kindergartens", "childcare")I have also picked-up some other interesting variables that may be included later in my regression model to improve the quality of the future model.

extra_variables = c("lta_cycling_paths", "disability", "hsgb_fcc", "sportsg_sport_facilities", "ssc_sports_facilities", "wastedisposalsite", "drainageconstruction", "cpe_pei_premises", "sewerageconstruction", "danger_areas", "aquaticsg", "moh_isp_clinics", "heritagetrees", "nparks_activity_area", "exercisefacilities", "mha_uav_2015", "playsg", "underconstruction_facilities", "preschools_location", "hdbcyclingunderconstruction", "boundary_5km", "hdbluppropunderconstruction", "parkingstandardszone", "libraries", "cyclingpath", "dengue_cluster", "greenbuilding", "nparks_uc_parks", )You won’t see these additional variables in my model due to the computing power of my computer. However, I strongly recommend to try an include these potential independent variables to improve the model.

2.5.4 Retrieving the available variables with the OneMap API

Using the code chunk below, you can retrieve the location name and its coordinates based on the previously created list of variables. We store this information into a data frame to make our use of this data easier

df = data.frame()

url = "https://developers.onemap.sg/privateapi/themesvc/retrieveTheme"

for (x in ind_variables){

query <- list("queryName" = x, "token" = token_api)

result <- GET(url, query = query)

print("Start")

print(x)

for (i in 2:length(content(result)$SrchResults)){

new_row = c(content(result)$SrchResults[[i]]$NAME,

str_split_fixed(content(result)$SrchResults[[i]]$LatLng, ",", 2)[1],

str_split_fixed(content(result)$SrchResults[[i]]$LatLng, ",", 2)[2],

content(result)$SrchResults[[1]]$Theme_Name)

df = rbind(df, new_row)

}

}

colnames(df)<-c("location_name", "lat", "lon", "variable_name")Note that retrieving the data takes a few minutes, so I took care of exporting the data frame into a csv file to avoid running the previous code chunk multiple times.

write_csv(df, "data/geospatial/retrieved_variables.csv")We now have part of our independent variables stored into a csv file that we will import back into our R environment.

retrieved_variables = read_csv("data/geospatial/retrieved_variables.csv", )If we look back to the variables we chose to retrieve using the One Map API, we had sub-categories to our independent variables.

unique(retrieved_variables$variable_name)[1] "eldercare" "hawker_centres" "parks" "kindergartens"

[5] "child_care" For instance, we have four sub-categories for hawker centres and food courts. We shall simplify our work by renaming these sub-categories under one name only. Using the next code chunk, we may do so.

retrieved_variables$variable_name = str_replace(retrieved_variables$variable_name,

"Eldercare Services",

"eldercare")

retrieved_variables$variable_name = str_replace(retrieved_variables$variable_name,

"^Hawker Centres$",

"hawker_centres")

retrieved_variables$variable_name = str_replace(retrieved_variables$variable_name,

"Hawker Centres_New",

"hawker_centres")

retrieved_variables$variable_name = str_replace(retrieved_variables$variable_name,

"Healthier Hawker Centres",

"hawker_centres")

retrieved_variables$variable_name = str_replace(retrieved_variables$variable_name,

"Maxwell Chambers F&B map",

"hawker_centres")

retrieved_variables$variable_name = str_replace(retrieved_variables$variable_name,

"Healthier Caterers",

"hawker_centres")

retrieved_variables$variable_name = str_replace(retrieved_variables$variable_name,

"Parks@SG",

"parks")

retrieved_variables$variable_name = str_replace(retrieved_variables$variable_name,

"Parks",

"parks")

retrieved_variables$variable_name = str_replace(retrieved_variables$variable_name,

"Kindergartens",

"kindergartens")

retrieved_variables$variable_name = str_replace(retrieved_variables$variable_name,

"Child Care Services",

"child_care")unique(retrieved_variables$variable_name)[1] "eldercare" "hawker_centres" "parks" "kindergartens"

[5] "child_care" We shall now proceed to creating sf objects from the extracted coordinates.

2.5.5 Transforming the data frame to sf data frame

Using the st_as_sf() function from the sf package, we can transform our set of coordinates into sf point objects.

retrieved_variables.sf = st_as_sf(retrieved_variables,

coords = c("lon", "lat"),

crs = 4326) %>%

st_transform(3414)We shall check if our data points have been correctly transformed by plotting the points on the Singapore map using the tmap package.

tmap_mode("plot")

tm_shape(mpsz.sf)+

tm_polygons()+

tm_shape(retrieved_variables.sf)+

tm_dots()

3 Retrieving and Importing the Aspatial Data

3.1 Resale Price of HDB flats

After retrieving the resale HDB prices from the data.gov.sg website, I moved the folder into my data folder, it contains all the data sets necessary for our analysis, and more precisely stored it into the aspatial folder.

The downloaded data is stored in a folder called resale-flat-prices, itself containing multiple files. To choose the right file, we can use the base r function list.files() to return a list of the file’s names.

path = "data/aspatial/resale-flat-prices"

files = list.files(path)

files[1] "metadata-resale-flat-prices.txt"

[2] "resale-flat-prices-based-on-approval-date-1990-1999.csv"

[3] "resale-flat-prices-based-on-approval-date-2000-feb-2012.csv"

[4] "resale-flat-prices-based-on-registration-date-from-jan-2015-to-dec-2016.csv"

[5] "resale-flat-prices-based-on-registration-date-from-jan-2017-onwards.csv"

[6] "resale-flat-prices-based-on-registration-date-from-mar-2012-to-dec-2014.csv"Looking at the above list, it seems clear that we should use file n°5 as it contains the data from 2017 and later. It gives us the intuition that we may need to treat the data and take a subset of data rows within the analysis period (2021 to 2023). We will import the data using the read_csv() function from the readr package.

resale_price = read_csv(file = paste(path, files[5], sep = "/"))We should take a quick look at the fields contained in the resale_price data frame. We do so using the glimpse() function.

glimpse(resale_price)Rows: 148,096

Columns: 11

$ month <chr> "2017-01", "2017-01", "2017-01", "2017-01", "2017-…

$ town <chr> "ANG MO KIO", "ANG MO KIO", "ANG MO KIO", "ANG MO …

$ flat_type <chr> "2 ROOM", "3 ROOM", "3 ROOM", "3 ROOM", "3 ROOM", …

$ block <chr> "406", "108", "602", "465", "601", "150", "447", "…

$ street_name <chr> "ANG MO KIO AVE 10", "ANG MO KIO AVE 4", "ANG MO K…

$ storey_range <chr> "10 TO 12", "01 TO 03", "01 TO 03", "04 TO 06", "0…

$ floor_area_sqm <dbl> 44, 67, 67, 68, 67, 68, 68, 67, 68, 67, 68, 67, 67…

$ flat_model <chr> "Improved", "New Generation", "New Generation", "N…

$ lease_commence_date <dbl> 1979, 1978, 1980, 1980, 1980, 1981, 1979, 1976, 19…

$ remaining_lease <chr> "61 years 04 months", "60 years 07 months", "62 ye…

$ resale_price <dbl> 232000, 250000, 262000, 265000, 265000, 275000, 28…The available fields are as follows:

- month: it indicates the month of transaction

- town: it indicates the district

- flat_type: it indicates the number of rooms in the flat

- block: it indicates the block number of the HDB flat

- street_name: it indicates the street number and name

- storey_range: it indicates the floor number of the flat

- floor_area_sqm: it indicates the floor area of the flat in square meter

- flat_model: it indicates the HDB flat model

- lease_commence_date: it indicates the starting year date of the lease (in Singapore, HDB flats are 99-year leaseholds)

- remaining_lease: it indicates the remaining years and months on the 99-year leasehold

- resale_price: it indicates the resale price of a given flat in SGD (Singapore dollars)

Now, we will use the head() function to look at the available data in each field.

head(resale_price)# A tibble: 6 × 11

month town flat_…¹ block stree…² store…³ floor…⁴ flat_…⁵ lease…⁶ remai…⁷

<chr> <chr> <chr> <chr> <chr> <chr> <dbl> <chr> <dbl> <chr>

1 2017-01 ANG MO … 2 ROOM 406 ANG MO… 10 TO … 44 Improv… 1979 61 yea…

2 2017-01 ANG MO … 3 ROOM 108 ANG MO… 01 TO … 67 New Ge… 1978 60 yea…

3 2017-01 ANG MO … 3 ROOM 602 ANG MO… 01 TO … 67 New Ge… 1980 62 yea…

4 2017-01 ANG MO … 3 ROOM 465 ANG MO… 04 TO … 68 New Ge… 1980 62 yea…

5 2017-01 ANG MO … 3 ROOM 601 ANG MO… 01 TO … 67 New Ge… 1980 62 yea…

6 2017-01 ANG MO … 3 ROOM 150 ANG MO… 01 TO … 68 New Ge… 1981 63 yea…

# … with 1 more variable: resale_price <dbl>, and abbreviated variable names

# ¹flat_type, ²street_name, ³storey_range, ⁴floor_area_sqm, ⁵flat_model,

# ⁶lease_commence_date, ⁷remaining_lease3.1.1 Selecting trasactions of 3 Room apartments from 2021 onwards

My previously mentioned intuition is confirmed. The month variable takes data starting in January 2017 and, through quick data manipulation, we will have to restrain the data frames to the 2021-2022 analysis period and 2023 data testing period. In addition, it looks like the town field in unnecessary and may be dropped. We only need the block and steet_name fields to geocode the addresses and create sf geometry.

Here we pipe the select() function to remove the town variable, filter and grepl functions to create a subset of the data by only selecting transactions that took place in 2021 to 2023, and finally pipe once again the filter function to select only three room HDB flats.

rp_3rooms = resale_price %>%

select(-2) %>%

filter(grepl("202[123]", month)) %>%

filter(flat_type == "3 ROOM")3.1.2 Transforming the storey_range column to dummy variables

Since we would like to include the storey_range variable in our analysis and the column only takes categorical data, we shall create dummy variables to indicate the storey range of each HDB flat.

The first step is to look at what are the unique values in the column. Using the unique() function, we retrieve in the form of a list the unique categorical values.

unique(rp_3rooms$storey_range) [1] "04 TO 06" "01 TO 03" "07 TO 09" "10 TO 12" "13 TO 15" "16 TO 18"

[7] "19 TO 21" "25 TO 27" "22 TO 24" "37 TO 39" "31 TO 33" "34 TO 36"

[13] "40 TO 42" "46 TO 48" "28 TO 30" "43 TO 45"Now, using a for loop and the ifelse() function, we will create a new column for each unique categorical variable contained in the storey_range field and assign a 1 if the particular HDB flat belongs to the specific storey range.

for (i in unique(rp_3rooms$storey_range)){

rp_3rooms[i] = ifelse(rp_3rooms$storey_range == i, 1, 0)

}3.1.3 Transforming the flat_model column to dummy variables

We repeat the same process with the flat_model variable.

unique(rp_3rooms$flat_model)[1] "New Generation" "Improved" "Model A"

[4] "Simplified" "Standard" "Premium Apartment"

[7] "DBSS" "Terrace" for (i in unique(rp_3rooms$flat_model)){

rp_3rooms[i] = ifelse(rp_3rooms$flat_model == i, 1, 0)

}3.1.4 Transforming the remaining_lease to a numerical variable

If you remember previously when we took a look at the different data fields available in our data frame, you could notice that the remaining_lease variable stored character data, however, we would like it to be a numerical data field.

To transform our column into a numerical field, we use a for loop with if/else statements. This will allow us to extract the number of years and months remaining on the lease and create a variable that stores the years remaining before expiration of the lease.

lease_remaining = list()

for (i in 1:nrow(rp_3rooms)){

lease = str_extract_all(rp_3rooms$remaining_lease, "[0-9]+")[[i]]

year = as.numeric(lease[1])

if (length(lease) < 2){

lease_remaining = append(lease_remaining, year)

} else {

month = as.numeric(lease[2])

lease_remaining = append(lease_remaining, round(year+month/12, 2))

}

}rp_3rooms$remaining_lease = as.numeric(lease_remaining)3.1.5 Geocoding the address

The first step to geocoding the address - retrieving the latitude and longitude based in the address - is to create a new column that stores the cleaned full address. Consequently, we create a new field called cleaned_address that combines both the block and street_name fields. This will allow us to retrieve the geocode of the HDB flats in our query.

rp_3rooms["cleaned_address"] = paste(rp_3rooms$block, rp_3rooms$street_name, sep = " ")Now that we have a column that stores the cleaned addresses, we can move on to the geocoding. By using the httr package and One Map API, we will create GET requests that retrieve the full address of the HDB flat, its longitude and latitude. We will store all this data into three lists to later create three additional columns in our data frame.

url = "https://developers.onemap.sg/commonapi/search"

full_address = list()

latitude = list()

longitude = list()

for (address in rp_3rooms$cleaned_address){

query <- list("searchVal" = address,

"returnGeom" = "Y", "getAddrDetails" = "Y")

res <- GET(url, query = query)

full_address = append(full_address, content(res)$results[[1]]$ADDRESS)

latitude = append(latitude, content(res)$results[[1]]$LATITUDE)

longitude = append(longitude, content(res)$results[[1]]$LONGITUDE)

}rp_3rooms$full_address = full_address

rp_3rooms$lat = latitude

rp_3rooms$lon = longitudeBefore exporting the data frame to a csv file (to avoid repeating this lengthy step), we shall use the apply function to transform our three newly created columns to the right type (character / numerical type).

options(digits=15)

rp_3rooms$full_address = apply(rp_3rooms[, 28], 2, as.character)

rp_3rooms$lat = apply(rp_3rooms[, 29], 2, as.numeric)

rp_3rooms$lon = apply(rp_3rooms[, 30], 2, as.numeric)write.csv(rp_3rooms, "data/aspatial/geocoded_resale_price.csv", row.names = FALSE)We import the geocoded data back into the R environment.

rp_3rooms = read_csv("data/aspatial/geocoded_resale_price.csv")3.1.6 Dropping the irrelevant variables

head(rp_3rooms)# A tibble: 6 × 38

month flat_t…¹ block stree…² store…³ floor…⁴ flat_…⁵ lease…⁶ remai…⁷ resal…⁸

<chr> <chr> <chr> <chr> <chr> <dbl> <chr> <dbl> <dbl> <dbl>

1 2021-01 3 ROOM 331 ANG MO… 04 TO … 68 New Ge… 1981 59 260000

2 2021-01 3 ROOM 534 ANG MO… 04 TO … 68 New Ge… 1980 58.2 265000

3 2021-01 3 ROOM 561 ANG MO… 01 TO … 68 New Ge… 1980 58.1 265000

4 2021-01 3 ROOM 170 ANG MO… 07 TO … 60 Improv… 1986 64.2 268000

5 2021-01 3 ROOM 463 ANG MO… 04 TO … 68 New Ge… 1980 58.2 268000

6 2021-01 3 ROOM 542 ANG MO… 04 TO … 68 New Ge… 1981 59.1 270000

# … with 28 more variables: `04 TO 06` <dbl>, `01 TO 03` <dbl>,

# `07 TO 09` <dbl>, `10 TO 12` <dbl>, `13 TO 15` <dbl>, `16 TO 18` <dbl>,

# `19 TO 21` <dbl>, `25 TO 27` <dbl>, `22 TO 24` <dbl>, `37 TO 39` <dbl>,

# `31 TO 33` <dbl>, `34 TO 36` <dbl>, `40 TO 42` <dbl>, `46 TO 48` <dbl>,

# `28 TO 30` <dbl>, `43 TO 45` <dbl>, cleaned_address <chr>,

# full_address <chr>, lat <dbl>, lon <dbl>, `New Generation` <dbl>,

# Improved <dbl>, `Model A` <dbl>, Simplified <dbl>, Standard <dbl>, …We will drop the following irrelevant fields.

rp_3rooms.cleaned = rp_3rooms %>%

select(-c("flat_type", "block", "street_name", "storey_range", "flat_model", "cleaned_address", "full_address"))3.1.7 Creating the sf geometry

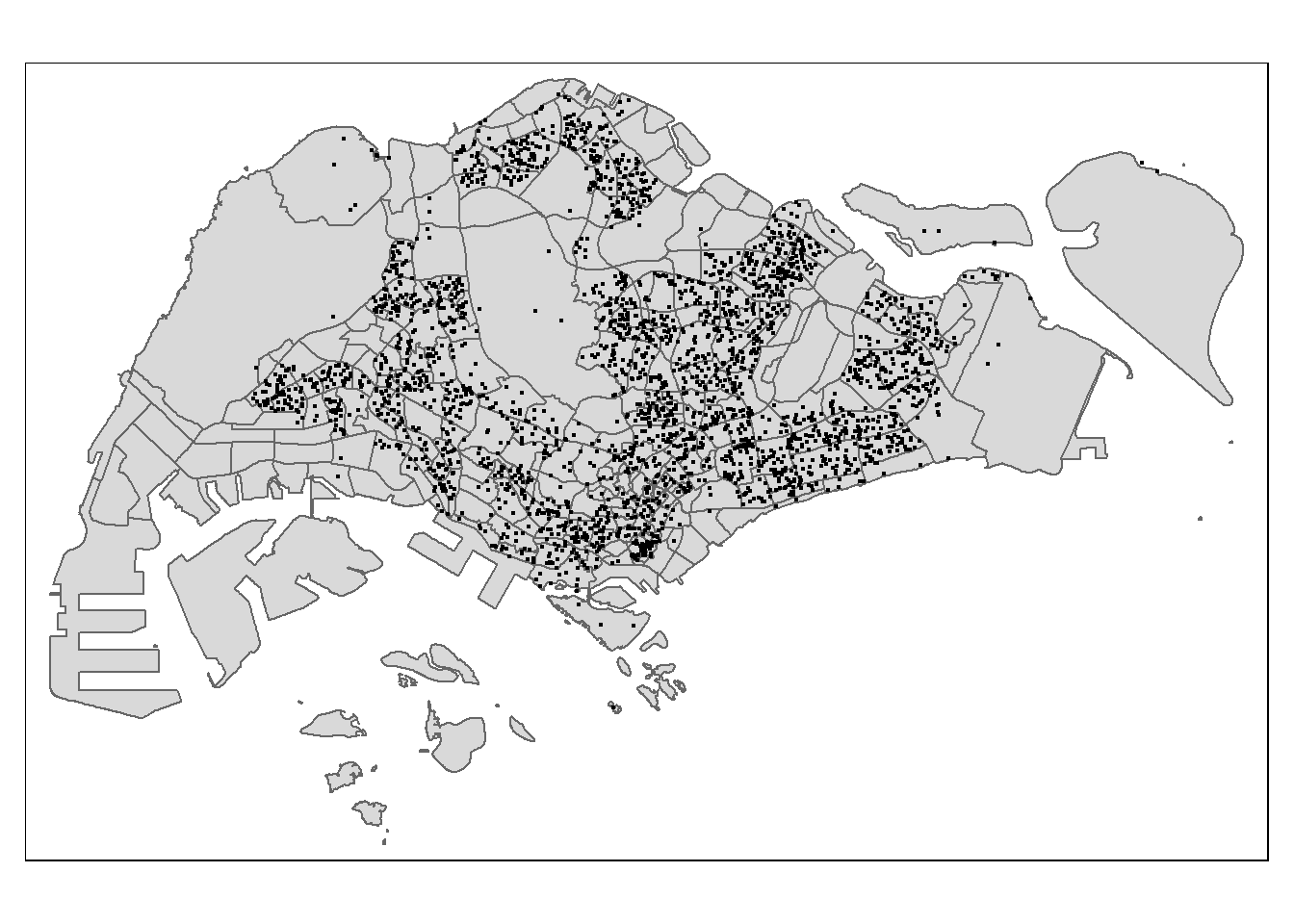

Using the tibble data frame and lon/lat fields, we create a sf data frame and visualize the data with the tmap package.

rp_3rooms.sf = st_as_sf(rp_3rooms.cleaned,

coords = c("lon", "lat"),

crs = 4326) %>%

st_transform(3414)tmap_mode("plot")

tm_shape(mpsz.sf)+

tm_polygons()+

tm_shape(rp_3rooms.sf)+

tm_dots()

3.2 Primary School data

After thoroughly looking on the web, I was not able to find a list of primary schools of Singapore to download. So, to improve the reproducibility of my work, I scrapped the sgschooling website to retrieve what seemed to me like an up-to-date list of primary schools.

3.2.1 Scrapping Primary Schools online with rvest package

The first step is to retrieve the names of the primary schools. Looking at the code chunk below, I used the httr, xml2, and rvest packages to create a GET request, read the HTML code of the website, and extract the names of the schools to store them into a list.

url = "https://sgschooling.com/school/"

res = GET(url)

wiki_read <- xml2::read_html(res, encoding = "UTF-8")

testt = wiki_read %>%

html_elements("a")

testt1 = testt[grepl("school/", testt)]

pattern <- ">(.*)<"

result <- regmatches(testt1, regexec(pattern, testt1))

schools = list()

for (i in 1:length(result)){

new_row = result[[i]][2]

schools = append(schools, new_row)

}3.2.2 Using the OneMap API to retrieve the schools’ coordinates

I then used this list and the One Map API to geocode these schools and retrieve their address as well as their longitude and latitude, and then stored all of it into a data frame.

Note that in my code I include an if/else statement in the case where we do not get a perfect match and are not able to retrieve information with the API.

primary_sc = data.frame()

url = "https://developers.onemap.sg/commonapi/search"

for (i in 1:length(schools)){

query <- list("searchVal" = schools[[i]],

"returnGeom" = "Y",

"getAddrDetails" = "Y")

result <- GET(url, query = query)

if (length(content(result)$results) == 0){

new_row = c(schools[[i]],

NA,

NA,

NA)

primary_sc = rbind(primary_sc, new_row)

} else{

new_row = c(schools[[i]],

content(result)$results[[1]]$ADDRESS,

content(result)$results[[1]]$LATITUDE,

content(result)$results[[1]]$LONGITUDE)

primary_sc = rbind(primary_sc, new_row)

}

}

colnames(primary_sc) = c("location_name", "full_address", "lat", "lon")3.2.3 Checking for missing information

sum(is.na(primary_sc$full_address))It seems like we have missing data for 9 primary schools. It is probably because the name of the school is not recognized by the API, so we will try to clean them to get their information into our data frame.

primary_sc %>%

filter(is.na(primary_sc$full_address) == TRUE) %>%

select(1)By checking on the web, it seems like the “Juying Primary School” has merged with the “Pioneer Primary School”, so we may drop the “Juying Primary School” from the data frame.

primary_sc1 = primary_sc %>%

filter(!grepl("Juying Primary School", location_name))Now, regarding the remaining schools, after performing a tests, it seems like I am not able to retrieve the information using the API so we will be filling the values with, first, the Google Maps API and then, if there are still some empty information, I will be filling the values manually by looking online.

You may want to check the next two code chunks I have used to try and retrieve the missing information using the OneMap API.

primary_sc1$location_name = str_replace(primary_sc1$location_name,

"St. ", "")

# I have also tried replacing the string with: "Saint" and "St" but I am not able to make the API retrieve the dataurl = "https://developers.onemap.sg/commonapi/search"

for (i in 1:nrow(primary_sc1)){

if (is.na(primary_sc1[i, 2])){

query <- list("searchVal" = primary_sc1[i, 1],

"returnGeom" = "Y",

"getAddrDetails" = "Y")

result <- GET(url, query = query)

if (length(content(result)$results) == 0){

next

} else{

primary_sc1[i, 2] = content(result)$results[[1]]$ADDRESS

primary_sc1[i, 3] = content(result)$results[[1]]$LATITUDE

primary_sc1[i, 4] = content(result)$results[[1]]$LONGITUDE

}} else{ next

}

}These are the schools with the missing information.

primary_sc1 %>%

filter(is.na(primary_sc1$full_address) == TRUE) %>%

select(1)Using the Google Maps API and its library ggmap, we will loop through the schools with missing coordinates. Since all these schools are located consecutively in the data frame, I use the following structure to create the list of schools.

While looping through these schools, we use the geocode() function of ggmap to retrieve the latitude and longitude based on the school name and assign these values in the corresponding missing cells of the primary_sc1 data frame.

register_google(key = "xxx")

missing_sc = primary_sc1[grep("St. Andrew’s Junior School", primary_sc1$location_name):grep("St. Stephen’s School", primary_sc1$location_name), 1]

for (i in missing_sc){

coords = geocode(i)

primary_sc1[grep(i, primary_sc1$location_name), 3] = coords$lat

primary_sc1[grep(i, primary_sc1$location_name), 4] = coords$lon

}We should check if we still have missing coordinates. Note that we are missing information in the full_address column, however, it is not a great deal since we will be using the coordinates to create the sf objects later on.

primary_sc1 %>%

filter(is.na(primary_sc1$lat) == TRUE)We are still missing coordinates for three schools. We will be filling these values manually.

primary_sc1[grep("St. Anthony’s Primary School", primary_sc1$location_name), 3:4] = list(1.3796337949311097, 103.75033229288428)

primary_sc1[grep("St. Gabriel’s Primary School", primary_sc1$location_name), 3:4] = list(1.3499742816603595, 103.86268328706147)

primary_sc1[grep("St. Stephen’s School", primary_sc1$location_name), 3:4] = list(1.3189794629543288, 103.9179118196036)We are now good to go, we can proceed to creating a sf data frame, however, before doing so, I will export the data frame into my data folder to avoid using the Google Maps API again (it is free under a limited amount of queries).

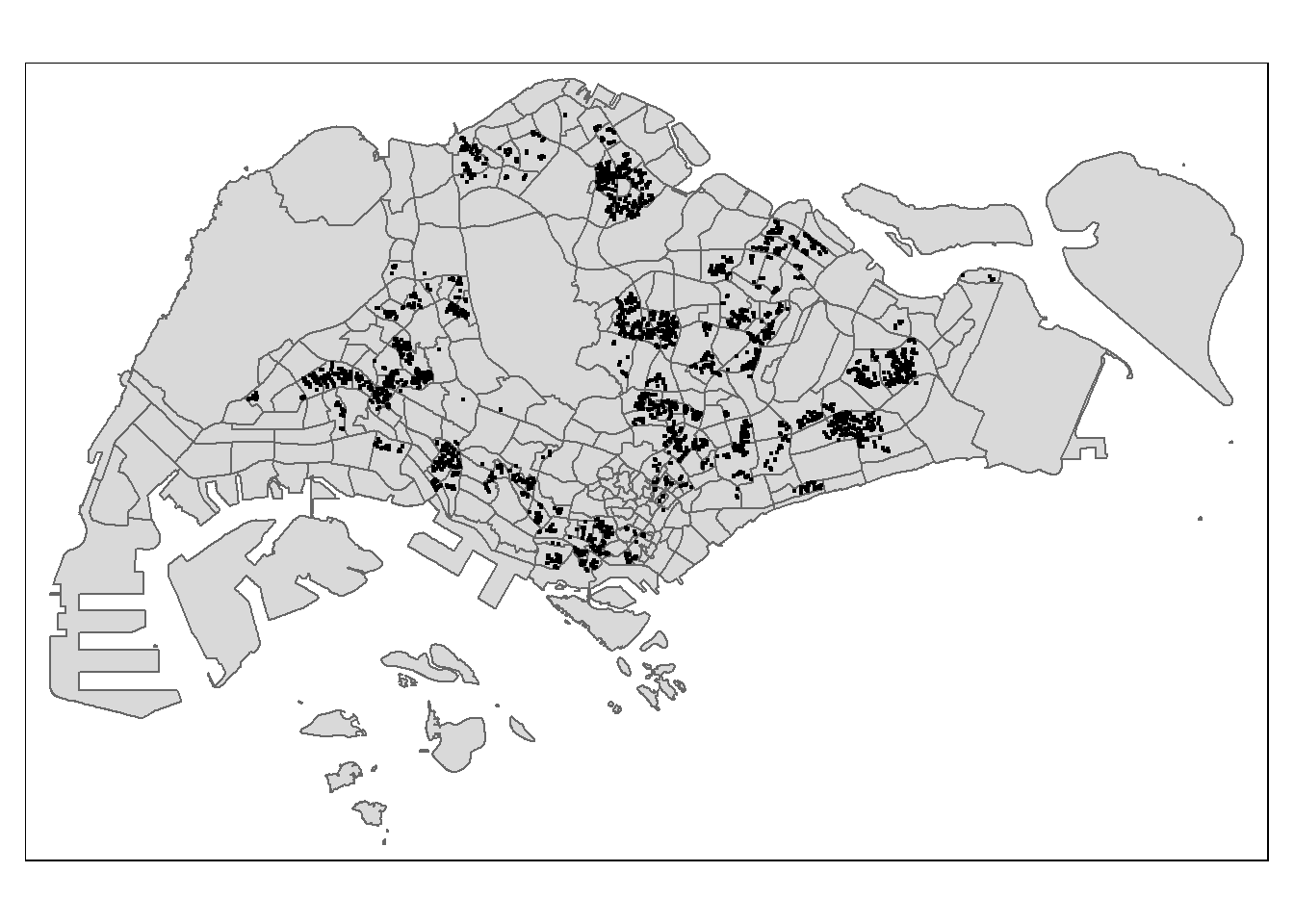

write.csv(primary_sc1, "data/aspatial/primary_schools.csv", row.names = FALSE)primary_schools = read_csv("data/aspatial/primary_schools.csv")3.2.4 Creating the sf data frame

primary_schools.sf = st_as_sf(primary_schools,

coords = c("lon", "lat"),

crs = 4326) %>%

st_transform(3414)3.2.5 Visualizing the data

tmap_mode("plot")

tm_shape(mpsz.sf)+

tm_polygons()+

tm_shape(primary_schools.sf)+

tm_dots()

3.2.6 Creating a field with the Top 20 Primary Schools

We will now create an additional variable that indicates whether one primary school is considered good or not. I made the selection factor be whether the primary school is in the Top 20 primary schools or not. I extracted from the internet the Top 20 list that you may find below. With this list, we can create a dummy variable that stores a 1 (0) if the school is part (or not) of the Top 20.

top20_sc = c("Methodist Girls’ School (Primary)", "Catholic High School", "Tao Nan School", "Pei Hwa Presbyterian Primary School", "Holy Innocents’ Primary School", "Nan Hua Primary School", "CHIJ St. Nicholas Girls’ School", "Admiralty Primary School", "St. Hilda’s Primary School", "Ai Tong School", "Anglo-Chinese School (Junior)", "Chongfu School", "St. Joseph’s Institution Junior", "Anglo-Chinese School (Primary)", "Singapore Chinese Girls’ Primary School", "Nanyang Primary School", "South View Primary School", "Pei Chun Public School", "Kong Hwa School", "Rosyth School")

primary_schools.sf["top20"] = ifelse(primary_schools.sf$location_name %in% top20_sc == TRUE,

1, 0)Note that I originally forgot to create this variable and am doing it after having ran all the regressions. Unfortunately, since my computer crashes most of the time when trying to perform the geographic regression, I won’t be able to include it now, but I still made sure to include how to create this variable.

3.3 Shopping Mall data

We encounter the same problem with the shopping malls as when looking for a data set of primary schools, so I decided to scrape Wikipedia for this one.

We perform very similar steps as the previous section on primary schools, so I won’t run you through every step.

3.3.1 Scraping Wikipedia to extract the list of malls in Singapore

url = "https://en.wikipedia.org/wiki/List_of_shopping_malls_in_Singapore"

res = GET(url)

wiki_read <- xml2::read_html(res, encoding = "UTF-8")

testt = wiki_read %>%

html_nodes("div.div-col") %>%

html_elements("ul") %>%

html_elements("li") %>%

html_text()

malls = str_replace(testt, "\\[.*]", "")3.3.2 Using the OneMap API to retrieve the malls’ coordinates

shp_malls = data.frame()

url = "https://developers.onemap.sg/commonapi/search"

for (i in 1:length(malls)){

query <- list("searchVal" = malls[i],

"returnGeom" = "Y",

"getAddrDetails" = "Y")

result <- GET(url, query = query)

if (length(content(result)$results) == 0){

new_row = c(malls[i],

NA,

NA,

NA)

shp_malls = rbind(shp_malls, new_row)

} else{

new_row = c(malls[i],

content(result)$results[[1]]$ADDRESS,

content(result)$results[[1]]$LATITUDE,

content(result)$results[[1]]$LONGITUDE)

shp_malls = rbind(shp_malls, new_row)

}

}

colnames(shp_malls) = c("location_name", "full_address", "lat", "lon")sum(is.na(shp_malls$full_address))3.3.3 Filling missing information with ggmap

shp_malls %>%

filter(is.na(shp_malls$full_address) == TRUE) %>%

select(1)Before using the Google Maps API, I decided to research about these nine malls and found that:

The PoMo mall is now called GR.iD, so we shall change the name in the data frame

The KINEX mall is found under the name KINEX, so we shall remove the additional information from the name

The Paya Lebar Quarter mall is found under the name PLQ mall on Google Maps, so we shall change the name in the data frame

shp_malls[grep("PoMo", shp_malls$location_name), 1] = "GR.iD";

shp_malls[grep("KINEX", shp_malls$location_name), 1] = "KINEX";

shp_malls[grep("PLQ", shp_malls$location_name), 1] = "PLQ mall"register_google(key = "xxx")

missing_malls = shp_malls %>%

filter(is.na(shp_malls$full_address) == TRUE) %>%

`$`(location_name)

for (i in list(missing_malls)[[1]]){

coords = geocode(i)

shp_malls[grep(i, shp_malls$location_name), 3] = coords$lat

shp_malls[grep(i, shp_malls$location_name), 4] = coords$lon

}shp_malls %>%

filter(is.na(shp_malls$lat) == TRUE)3.3.4 Filling missing information manually

We are still missing coordinates of the KINEX mall. We will be filling these values manually.

shp_malls[grep("KINEX", shp_malls$location_name), 3:4] = c(1.314893715213727, 103.89480904154526)We are now good to go, we can proceed to creating a sf data frame, however, before doing so, I will export the data frame into my data folder to avoid using the Google Maps API again (it is free under a limited amount of queries).

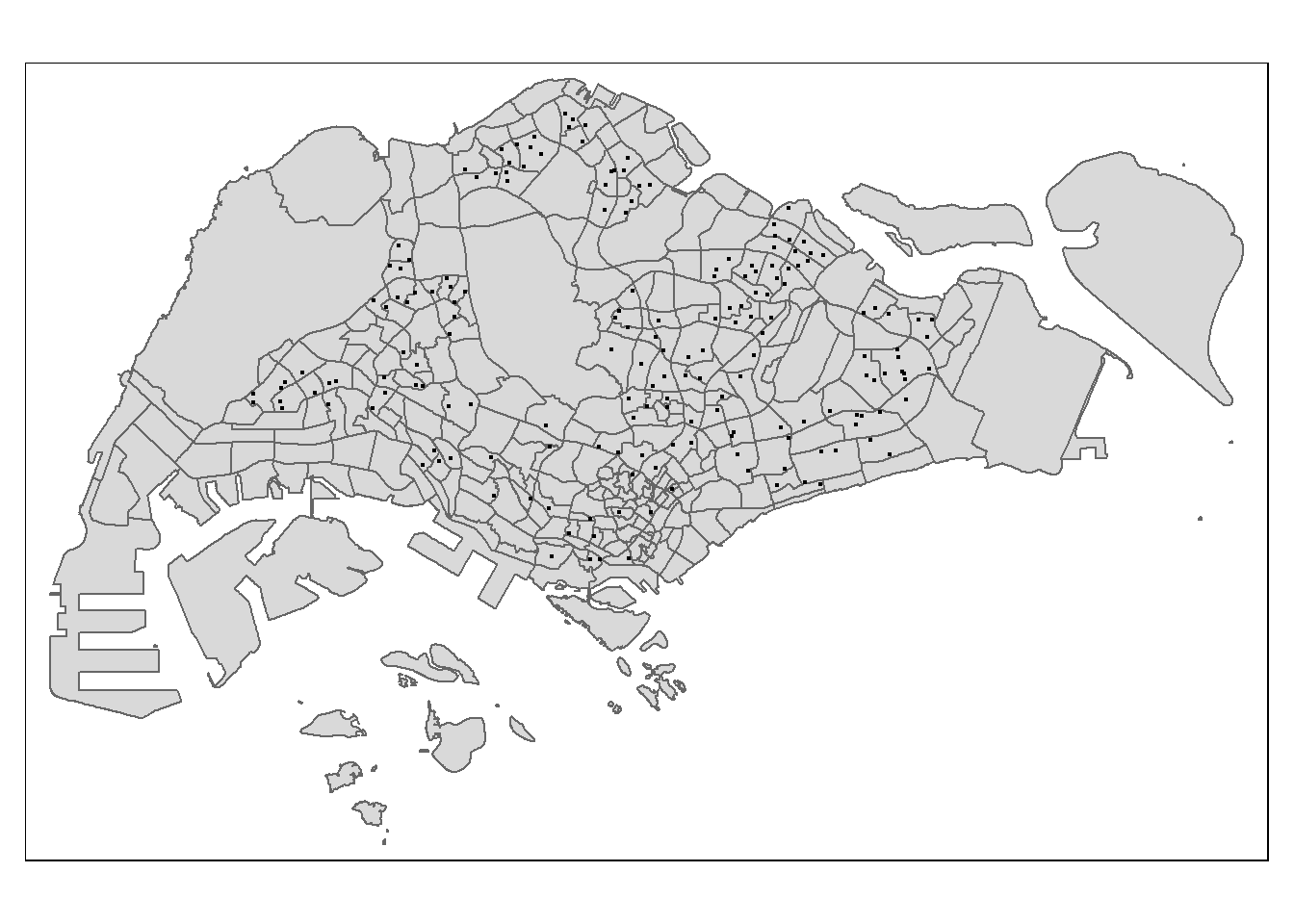

write.csv(shp_malls, "data/aspatial/shopping_malls.csv", row.names = FALSE)shp_malls = read_csv("data/aspatial/shopping_malls.csv")3.3.5 Creating an sf data frame

shp_malls.sf = st_as_sf(shp_malls,

coords = c("lon", "lat"),

crs = 4326) %>%

st_transform(3414)3.3.6 Visualizing the data

tmap_mode("plot")

tm_shape(mpsz.sf)+

tm_polygons()+

tm_shape(shp_malls.sf)+

tm_dots()

4 Preparing the final data frame

In this section, we will prepare the following variables:

Proximity to CBD ; Proximity to eldercare ; Proximity to foodcourt/hawker centres ; Proximity to MRT ; Proximity to park ; Proximity to good primary school ; Proximity to shopping mall ; Proximity to supermarket

Numbers of kindergartens within 350m ; Numbers of childcare centres within 350m ; Numbers of bus stop within 350m ; Numbers of primary school within 1km

4.1 Proximity variables

4.1.1 Proximity to CBD

We will consider the Downtown Core planning area to be the Central Business District. Given the previous statement, we will compute the distance of each HDB flats to the CBD area.

The first step is to compute the nearest feature between the HDB flats and CDB area. With this, we then can create a column that stores the distance between the two features (e.g., distance between each flat and nearest part of the CBD).

cbd_zone.sf = mpsz.sf %>%

filter(PLN_AREA_N == "DOWNTOWN CORE")

nearest = st_nearest_feature(rp_3rooms.sf, cbd_zone.sf)

rp_3rooms.sf$distance_to_cdb = as.numeric(

st_distance(rp_3rooms.sf,

cbd_zone.sf[nearest,],

by_element=TRUE) )Note that I had forgot to compute this variable when running my regressions, so you won’t see it later but I made sure to include the code to compute it.

4.1.2 Proximity to eldercare

eldercare.sf = retrieved_variables.sf %>%

filter(variable_name == "eldercare")nearest = st_nearest_feature(rp_3rooms.sf, eldercare.sf)

rp_3rooms.sf$distance_to_eldercare = as.numeric(

st_distance(rp_3rooms.sf,

eldercare.sf[nearest,],

by_element=TRUE) )4.1.3 Proximity to food court / hawker centres

hawker_centres.sf = retrieved_variables.sf %>%

filter(variable_name == "hawker_centres")nearest = st_nearest_feature(rp_3rooms.sf, hawker_centres.sf)

rp_3rooms.sf$distance_to_food = as.numeric(

st_distance(rp_3rooms.sf,

hawker_centres.sf[nearest,],

by_element=TRUE) )4.1.4 Proximity to MRT

nearest = st_nearest_feature(rp_3rooms.sf, mrt_exits.sf)

rp_3rooms.sf$distance_to_mrt = as.numeric(

st_distance(rp_3rooms.sf,

mrt_exits.sf[nearest,],

by_element=TRUE) )4.1.5 Proximity to park

parks.sf = retrieved_variables.sf %>%

filter(variable_name == "parks")nearest = st_nearest_feature(rp_3rooms.sf, parks.sf)

rp_3rooms.sf$distance_to_park = as.numeric(

st_distance(rp_3rooms.sf,

parks.sf[nearest,],

by_element=TRUE) )4.1.6 Proximity to good primary school

nearest = st_nearest_feature(rp_3rooms.sf, primary_schools.sf)

rp_3rooms.sf$top20_schools = as.numeric(

st_distance(rp_3rooms.sf,

primary_schools.sf[nearest,],

by_element=TRUE) )4.1.7 Proximity to shopping mall

nearest = st_nearest_feature(rp_3rooms.sf, shp_malls.sf)

rp_3rooms.sf$distance_to_mall = as.numeric(

st_distance(rp_3rooms.sf,

shp_malls.sf[nearest,],

by_element=TRUE) )4.1.8 Proximity to supermarket

nearest = st_nearest_feature(rp_3rooms.sf, supermarkets.sf)

rp_3rooms.sf$distance_to_supermarkets = as.numeric(

st_distance(rp_3rooms.sf,

supermarkets.sf[nearest,],

by_element=TRUE) )4.2 Number of … within given distance

The first step is to create buffers for each HDB flat. We create two buffers, one of 350m and one of 1000m (1km).

buffer_350 = st_buffer(rp_3rooms.sf, 350)

buffer_1000 = st_buffer(rp_3rooms.sf, 1000)Using these buffers will allow us to compute the number of a given variable inside the buffer.

4.2.1 Numbers of kindergartens within 350m

kindergartens.sf = retrieved_variables.sf %>%

filter(variable_name == "kindergartens")rp_3rooms.sf$nb_of_kindergartens = lengths(

st_intersects(buffer_350, kindergartens.sf))4.2.2 Numbers of childcare centres within 350m

child_care.sf = retrieved_variables.sf %>%

filter(variable_name == "child_care")rp_3rooms.sf$nb_of_childcare = lengths(

st_intersects(buffer_350, child_care.sf))4.2.3 Numbers of bus stop within 350m

rp_3rooms.sf$nb_of_bus_stops = lengths(

st_intersects(buffer_350, bus_stops.sf))4.2.4 Numbers of primary school within 1km

rp_3rooms.sf$nb_of_primary_schools = lengths(

st_intersects(buffer_1000, primary_schools.sf))4.3 Saving the data

We will save the data into a rds file to avoid running all the previous code every time we would like to work on the assignment since some of these steps are very lengthy.

write_rds(rp_3rooms.sf, "data/geospatial/final_dataset.rds")rp_3rooms.sf = read_rds("data/geospatial/final_dataset.rds")4.4 Check for missing data

sum(is.na(rp_3rooms.sf))[1] 0There is no missing that so we can move on to creating the hedonistic pricing models.

5 Hedonic Pricing model

The first step to our hedonistic pricing model is to clean the name of our fields. We do so using the rename function.

rp_3rooms.sf = rp_3rooms.sf %>%

rename("01_to_03" = "01 TO 03", "04_to_06" = "04 TO 06", "07_to_09" = "07 TO 09",

"10_to_12" = "10 TO 12", "13_to_15" = "13 TO 15", "16_to_18" = "16 TO 18",

"19_to_21" = "19 TO 21", "22_to_24" = "22 TO 24", "25_to_27" = "25 TO 27",

"28_to_30" = "28 TO 30", "31_to_33" = "31 TO 33", "34_to_36" = "34 TO 36",

"37_to_39" = "37 TO 39", "40_to_42" = "40 TO 42", "43_to_45" = "43 TO 45",

"46_to_48" = "46 TO 48", "New_Generation" = "New Generation",

"Model_A" = "Model A", "Premium_Apartment" = "Premium Apartment")5.1 Splitting the data

Now that are fields are cleaned, we should split the data into two data frames. One data frame used for training and one for testing. We do so using the filter and grepl functions.

Note that you can use regular expressions to be more efficient when trying to string match.

rp_analysis.sf = rp_3rooms.sf %>%

filter(grepl("202[12]", month))

rp_testing.sf = rp_3rooms.sf %>%

filter(grepl("2023", month))6 Conventional OLS method

The first analysis we will perform is the “standard” or “conventional” OLS. This is a multiple linear regression that takes into account only our independent variables. It does not use geographical location as a determinant of the resale price of an HDB flat.

Before running the analysis, there are a few preliminary steps. We should check for: non-linear relationships between the independent variables and the dependent variables; multicollinearity; heteroscedasticity.

6.1 Multicollinearity

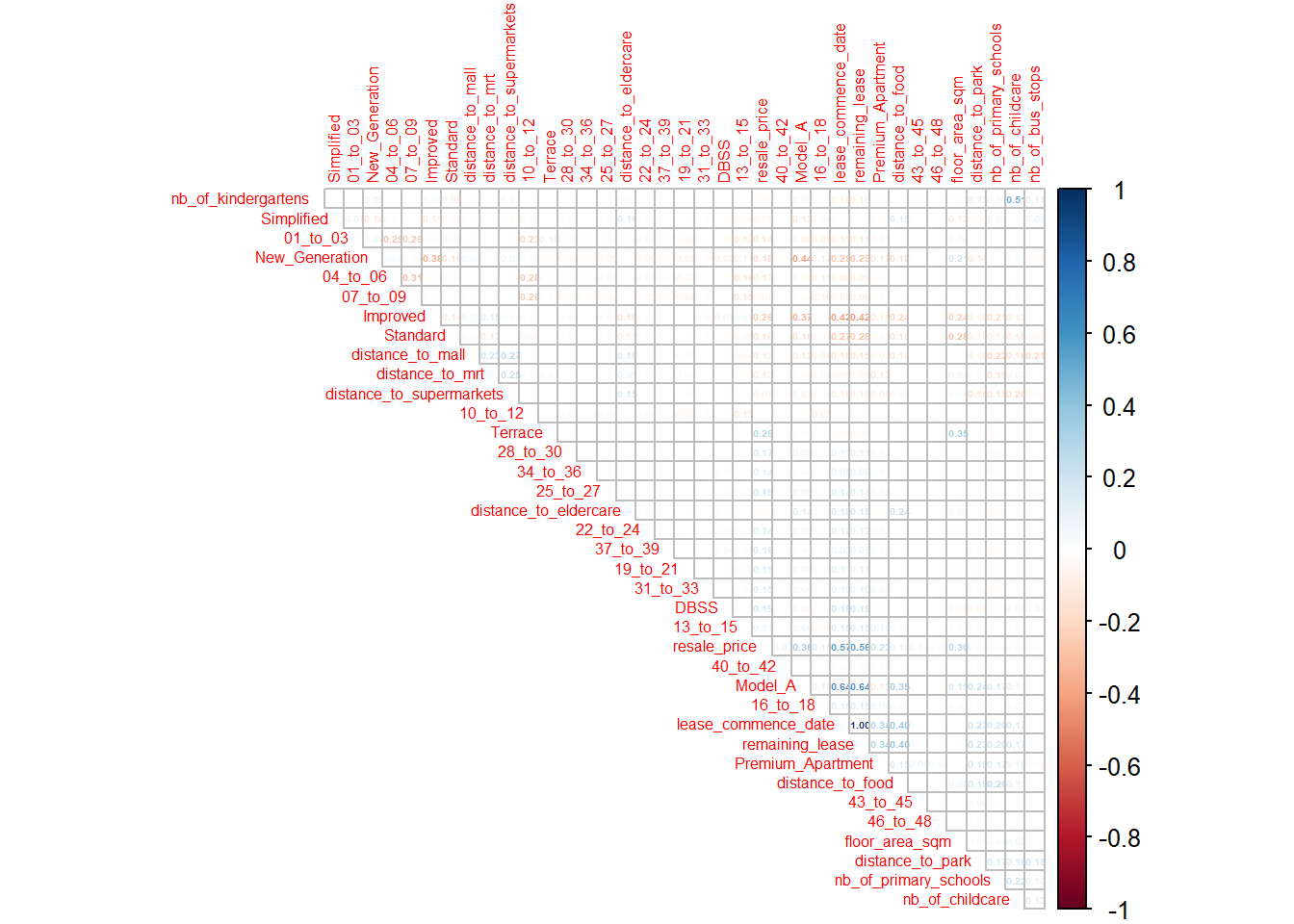

Using the corrplot package and its corrplot function, we get a clean correlation matrix that indicates positive correlation with the color blue and negative with red.

cor_test = cor(rp_3rooms.sf %>%

st_drop_geometry() %>%

select(-c(1, 22, 23)))

corrplot::corrplot(cor_test, diag = FALSE, order = "AOE", tl.pos = "td",

tl.cex = 0.5, method = "number", type = "upper",

number.cex = 0.3)

We find that there is a perfect correlation between the lease_commence_date and remaining_lease variables, so we will include only one of the two in our OLS regression.

6.2 MLR model

To test for linearity and heteroscedasticity, we first need to run the regression. Note that using the next code chunk, you can retrieve the list of variables in our data frame.

colnames(rp_3rooms.sf)rp_analysis.mlr <- lm(formula = resale_price ~ floor_area_sqm + remaining_lease +

`01_to_03` + `04_to_06` + `07_to_09` + `10_to_12` + `13_to_15` +

`16_to_18` + `19_to_21` + `22_to_24` + `25_to_27` + `28_to_30` +

`31_to_33` + `34_to_36` + `37_to_39` + `40_to_42` + `43_to_45` +

`New_Generation` + Improved + `Model_A` + Simplified + Standard +

`Premium_Apartment` + DBSS + distance_to_eldercare +

distance_to_food + distance_to_mrt + distance_to_park +

distance_to_mall + distance_to_supermarkets + nb_of_kindergartens +

nb_of_childcare + nb_of_bus_stops + nb_of_primary_schools,

data = rp_analysis.sf)

stargazer(rp_analysis.mlr, type="text")

====================================================

Dependent variable:

---------------------------

resale_price

----------------------------------------------------

floor_area_sqm 3,946.537***

(93.530)

remaining_lease 2,754.191***

(61.207)

`01_to_03` -257,839.693***

(52,620.249)

`04_to_06` -248,405.940***

(52,616.441)

`07_to_09` -241,109.221***

(52,616.860)

`10_to_12` -232,833.112***

(52,618.266)

`13_to_15` -224,504.937***

(52,626.152)

`16_to_18` -205,120.927***

(52,659.851)

`19_to_21` -174,800.777***

(52,757.140)

`22_to_24` -158,664.647***

(52,852.098)

`25_to_27` -127,272.290**

(52,922.790)

`28_to_30` -96,167.414*

(53,084.925)

`31_to_33` -79,389.789

(53,208.006)

`34_to_36` -58,544.715

(53,510.236)

`37_to_39` -47,678.241

(53,296.651)

`40_to_42` -28,303.576

(56,745.135)

`43_to_45` 27,962.420

(58,710.939)

New_Generation -429,929.634***

(10,800.164)

Improved -423,909.938***

(11,010.310)

Model_A -422,627.638***

(10,789.771)

Simplified -402,908.906***

(11,227.145)

Standard -413,750.267***

(11,376.659)

Premium_Apartment -399,095.702***

(11,179.217)

DBSS -348,968.293***

(11,638.597)

distance_to_eldercare -16.072***

(0.945)

distance_to_food -19.181***

(1.600)

distance_to_mrt -27.268***

(1.376)

distance_to_park -20.903***

(1.530)

distance_to_mall -11.363***

(1.352)

distance_to_supermarkets -5.749**

(2.591)

nb_of_kindergartens 4,512.822***

(642.853)

nb_of_childcare -2,789.315***

(295.193)

nb_of_bus_stops -910.529***

(188.399)

nb_of_primary_schools -7,938.305***

(369.917)

Constant 675,321.297***

(54,923.892)

----------------------------------------------------

Observations 12,608

R2 0.638

Adjusted R2 0.637

Residual Std. Error 52,512.632 (df = 12573)

F Statistic 652.049*** (df = 34; 12573)

====================================================

Note: *p<0.1; **p<0.05; ***p<0.01It seems like our regression is statistically significant; the F-statistic is very high which indicates that the model is globally significant at a 1% significance level. Looking at the significance of our variables, we realize that most of them are significant apart from the following dummy variables: 43_to_45, 40_to_42, 37_to_39, 34_to_36, and 31_to_33. We may want to drop these variables in the future. Now, let’s look at the R-squared of this model. We find that the chosen variables explain about 64% of the total variation in the resale price. It is somewhat a good fit but i think we can improve that with a geographically weighted model.

6.3 Linearity

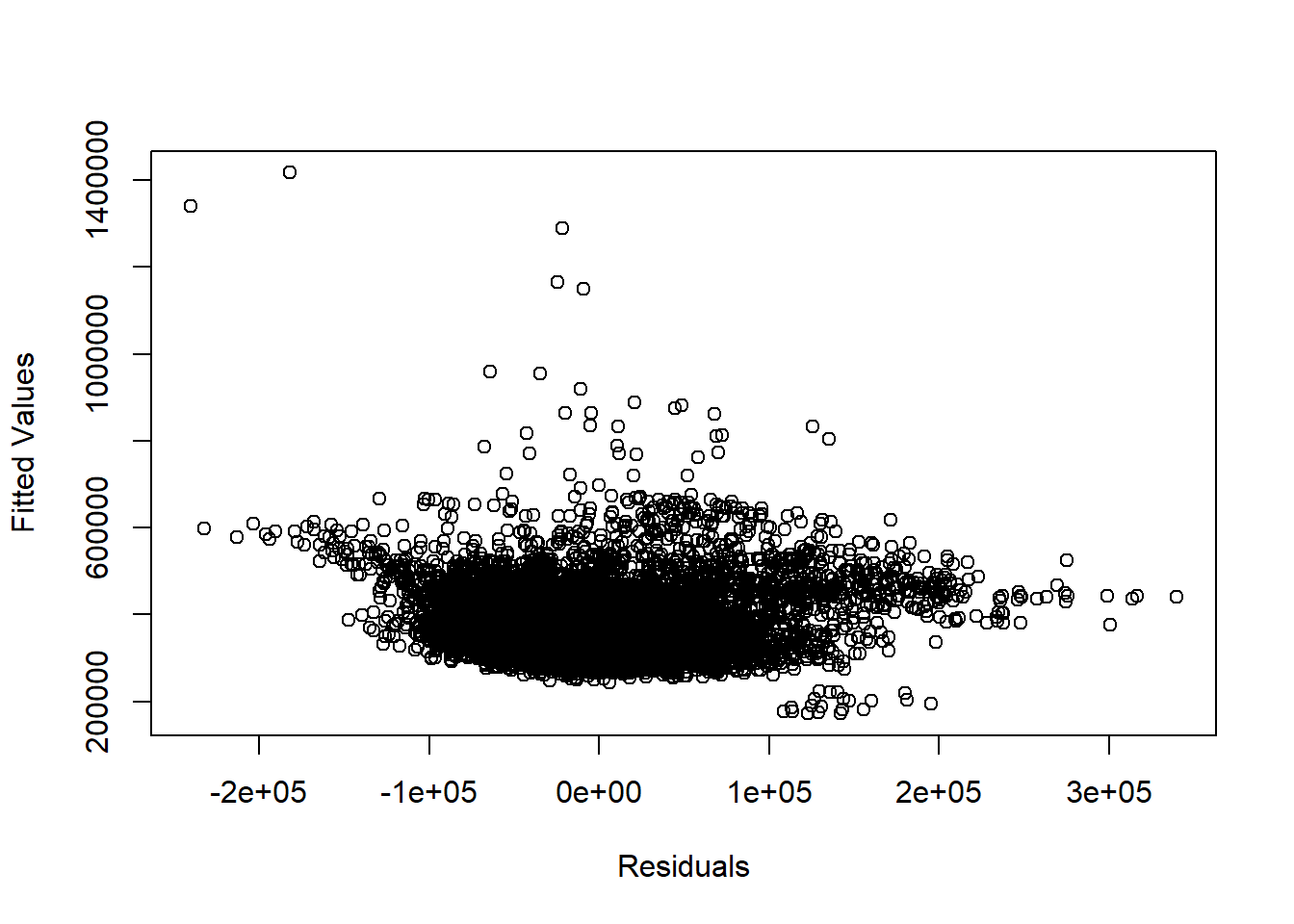

To check for linearity, we can plot the residuals agaisnt the fitted values of the regression. If we observe a random cloud of points, it means that there are no non-linearity issues.

plot(residuals(rp_analysis.mlr), fitted.values(rp_analysis.mlr),

xlab="Residuals", ylab="Fitted Values")

Looking at the above scatter plot, it seems that the cloud is not so random, meaning that it is likely that we observe non-linear relationships between some independent variables and the dependent variable.

6.4 Heteroscedasticity

To check if there is heteroscedasticity (we want homoscedasticity), we will use the Goldfeld-Quandt test.

gqtest(rp_analysis.mlr)

Goldfeld-Quandt test

data: rp_analysis.mlr

GQ = 0.9281659246132, df1 = 6269, df2 = 6269, p-value = 0.99841450489

alternative hypothesis: variance increases from segment 1 to 2The p-value proves that there is homoscedasticity of the residuals. So, we can consider our previous findings to be accurate under the Gauss-Markov theorem.

7 GWR methods

We will now moce on to our first geographically weighted regression. We will be using the gwr.basic function of the GWmodel package. We shall use an adaptive bandwidth for our model.

7.1 Converting sf data frame to SpatialPointDataFrame

The first step to what I described above is to convert our sf data frame into a SpatialPoint data frame.

rp_analysis.sp = as_Spatial(rp_analysis.sf)7.2 Computing adaptive bandwidth

Once this is done, we use the bw.gwr function to compute the bandwidth that we should use in the regression.

bw_analysis_adaptive <- bw.gwr(resale_price ~ floor_area_sqm + remaining_lease +

X01_to_03 + X04_to_06 + X07_to_09 + X10_to_12 + X13_to_15 +

X16_to_18 + X19_to_21 + X22_to_24 + X25_to_27 + X28_to_30 +

X31_to_33 + X34_to_36 + X37_to_39 + X40_to_42 + X43_to_45 +

New_Generation + Improved + Model_A + Simplified + Standard +

Premium_Apartment + DBSS + distance_to_eldercare +

distance_to_food + distance_to_mrt + distance_to_park +

distance_to_mall + distance_to_supermarkets + nb_of_kindergartens +

nb_of_childcare + nb_of_bus_stops + nb_of_primary_schools,

data = rp_analysis.sp, approach="CV", kernel="gaussian",

adaptive=TRUE, longlat=FALSE)write_rds(bw_analysis_adaptive, "data/model/bw_analysis_adaptive.rds")bw_adaptive <- read_rds("data/model/bw_analysis_adaptive.rds")

bw_adaptive[1] 1357It looks like we should use a bandwidth of 1357 neighbor points.

7.3 Constructing the adaptive bandwidth gwr model

Now, we can use the gwr.basic function to obtain our regression.

gwr_adaptive <- gwr.basic(formula = resale_price ~ floor_area_sqm + remaining_lease + X01_to_03 +

X04_to_06 + X07_to_09 + X10_to_12 + X13_to_15 + X16_to_18 + X19_to_21 +

X22_to_24 + X25_to_27 + X28_to_30 + X31_to_33 + X34_to_36 + X37_to_39 +

X40_to_42 + X43_to_45 + New_Generation + Improved + Model_A + Simplified +

Standard + Premium_Apartment + DBSS + distance_to_eldercare + distance_to_food +

distance_to_mrt + distance_to_park + distance_to_mall + distance_to_supermarkets +

nb_of_kindergartens + nb_of_childcare + nb_of_bus_stops + nb_of_primary_schools,

data = rp_analysis.sp, bw=bw_adaptive, kernel = 'gaussian', adaptive=TRUE,

longlat = FALSE)write_rds(gwr_adaptive, "data/model/gwr_analysis_adaptive.rds")gwr_adaptive = read_rds("data/model/gwr_analysis_adaptive.rds")

gwr_adaptive ***********************************************************************

* Package GWmodel *

***********************************************************************

Program starts at: 2023-03-20 00:51:55

Call:

gwr.basic(formula = resale_price ~ floor_area_sqm + remaining_lease +

X01_to_03 + X04_to_06 + X07_to_09 + X10_to_12 + X13_to_15 +

X16_to_18 + X19_to_21 + X22_to_24 + X25_to_27 + X28_to_30 +

X31_to_33 + X34_to_36 + X37_to_39 + X40_to_42 + X43_to_45 +

New_Generation + Improved + Model_A + Simplified + Standard +

Premium_Apartment + DBSS + distance_to_eldercare + distance_to_food +

distance_to_mrt + distance_to_park + distance_to_mall + distance_to_supermarkets +

nb_of_kindergartens + nb_of_childcare + nb_of_bus_stops +

nb_of_primary_schools, data = rp_analysis.sp, bw = bw_adaptive,

kernel = "gaussian", adaptive = TRUE, longlat = FALSE)

Dependent (y) variable: resale_price

Independent variables: floor_area_sqm remaining_lease X01_to_03 X04_to_06 X07_to_09 X10_to_12 X13_to_15 X16_to_18 X19_to_21 X22_to_24 X25_to_27 X28_to_30 X31_to_33 X34_to_36 X37_to_39 X40_to_42 X43_to_45 New_Generation Improved Model_A Simplified Standard Premium_Apartment DBSS distance_to_eldercare distance_to_food distance_to_mrt distance_to_park distance_to_mall distance_to_supermarkets nb_of_kindergartens nb_of_childcare nb_of_bus_stops nb_of_primary_schools

Number of data points: 12608

***********************************************************************

* Results of Global Regression *

***********************************************************************

Call:

lm(formula = formula, data = data)

Residuals:

Min 1Q Median 3Q Max

-240462.8895745 -33035.5338021 -4099.0414119 26337.1945223 339652.2172861

Coefficients:

Estimate Std. Error t value

(Intercept) 6.75321297326e+05 5.49238919832e+04 12.29558

floor_area_sqm 3.94653697865e+03 9.35301810120e+01 42.19533

remaining_lease 2.75419062799e+03 6.12068280832e+01 44.99809

X01_to_03 -2.57839692943e+05 5.26202487910e+04 -4.90001

X04_to_06 -2.48405940332e+05 5.26164408917e+04 -4.72107

X07_to_09 -2.41109221218e+05 5.26168599751e+04 -4.58236

X10_to_12 -2.32833112045e+05 5.26182655337e+04 -4.42495

X13_to_15 -2.24504937356e+05 5.26261515769e+04 -4.26603

X16_to_18 -2.05120926567e+05 5.26598505686e+04 -3.89521

X19_to_21 -1.74800777430e+05 5.27571400718e+04 -3.31331

X22_to_24 -1.58664646692e+05 5.28520975873e+04 -3.00205

X25_to_27 -1.27272290209e+05 5.29227901000e+04 -2.40487

X28_to_30 -9.61674142266e+04 5.30849249047e+04 -1.81158

X31_to_33 -7.93897892485e+04 5.32080061172e+04 -1.49206

X34_to_36 -5.85447153375e+04 5.35102358989e+04 -1.09408

X37_to_39 -4.76782410918e+04 5.32966506954e+04 -0.89458

X40_to_42 -2.83035759235e+04 5.67451351102e+04 -0.49878

X43_to_45 2.79624196753e+04 5.87109389443e+04 0.47627

New_Generation -4.29929633982e+05 1.08001636301e+04 -39.80770

Improved -4.23909938462e+05 1.10103100883e+04 -38.50118

Model_A -4.22627637926e+05 1.07897707978e+04 -39.16929

Simplified -4.02908905991e+05 1.12271446103e+04 -35.88703

Standard -4.13750267457e+05 1.13766593423e+04 -36.36834

Premium_Apartment -3.99095701534e+05 1.11792168610e+04 -35.69979

DBSS -3.48968293074e+05 1.16385974404e+04 -29.98371

distance_to_eldercare -1.60720547849e+01 9.44565101400e-01 -17.01530

distance_to_food -1.91812838402e+01 1.59990807892e+00 -11.98899

distance_to_mrt -2.72683047489e+01 1.37643630698e+00 -19.81080

distance_to_park -2.09027776390e+01 1.53021979062e+00 -13.65998

distance_to_mall -1.13632168038e+01 1.35152546069e+00 -8.40770

distance_to_supermarkets -5.74949826288e+00 2.59131049154e+00 -2.21876

nb_of_kindergartens 4.51282191853e+03 6.42853416663e+02 7.01999

nb_of_childcare -2.78931461657e+03 2.95193263547e+02 -9.44911

nb_of_bus_stops -9.10529320454e+02 1.88398960078e+02 -4.83298

nb_of_primary_schools -7.93830473583e+03 3.69917094138e+02 -21.45969

Pr(>|t|)

(Intercept) < 2.22e-16 ***

floor_area_sqm < 2.22e-16 ***

remaining_lease < 2.22e-16 ***

X01_to_03 9.7027e-07 ***

X04_to_06 2.3714e-06 ***

X07_to_09 4.6419e-06 ***

X10_to_12 9.7277e-06 ***

X13_to_15 2.0043e-05 ***

X16_to_18 9.8624e-05 ***

X19_to_21 0.00092459 ***

X22_to_24 0.00268695 **

X25_to_27 0.01619270 *

X28_to_30 0.07007547 .

X31_to_33 0.13570727

X34_to_36 0.27393892

X37_to_39 0.37102753

X40_to_42 0.61794016

X43_to_45 0.63388836

New_Generation < 2.22e-16 ***

Improved < 2.22e-16 ***

Model_A < 2.22e-16 ***

Simplified < 2.22e-16 ***

Standard < 2.22e-16 ***

Premium_Apartment < 2.22e-16 ***

DBSS < 2.22e-16 ***

distance_to_eldercare < 2.22e-16 ***

distance_to_food < 2.22e-16 ***

distance_to_mrt < 2.22e-16 ***

distance_to_park < 2.22e-16 ***

distance_to_mall < 2.22e-16 ***

distance_to_supermarkets 0.02652079 *

nb_of_kindergartens 2.3329e-12 ***

nb_of_childcare < 2.22e-16 ***

nb_of_bus_stops 1.3609e-06 ***

nb_of_primary_schools < 2.22e-16 ***

---Significance stars

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 52512.6319494 on 12573 degrees of freedom

Multiple R-squared: 0.638110548684

Adjusted R-squared: 0.637131924541

F-statistic: 652.048647601 on 34 and 12573 DF, p-value: < 2.220446049e-16

***Extra Diagnostic information

Residual sum of squares: 34671009513671.7

Sigma(hat): 52443.8530960874

AIC: 309884.79383796

AICc: 309885.005754276

BIC: 297884.624088431

***********************************************************************

* Results of Geographically Weighted Regression *

***********************************************************************

*********************Model calibration information*********************

Kernel function: gaussian

Adaptive bandwidth: 1357 (number of nearest neighbours)

Regression points: the same locations as observations are used.

Distance metric: Euclidean distance metric is used.

****************Summary of GWR coefficient estimates:******************

Min. 1st Qu.

Intercept 2.088671317153e+05 5.998777821386e+05

floor_area_sqm 2.387596184396e+03 3.011792694837e+03

remaining_lease 1.650595095776e+03 2.274857903910e+03

X01_to_03 -4.125708586698e+05 -2.784588036565e+05

X04_to_06 -4.068159545249e+05 -2.683798900342e+05

X07_to_09 -4.006193509978e+05 -2.602046448123e+05

X10_to_12 -3.935242242099e+05 -2.540259354664e+05

X13_to_15 -3.867704592358e+05 -2.467461124008e+05

X16_to_18 -3.819997184095e+05 -2.338750640869e+05

X19_to_21 -2.964059154308e+05 -2.220531501956e+05

X22_to_24 -3.649893746206e+05 -2.104819018812e+05

X25_to_27 -2.853860414403e+05 -1.626543832891e+05

X28_to_30 -2.303182980734e+05 -1.173174355323e+05

X31_to_33 -2.073032630430e+05 -8.982959381400e+04

X34_to_36 -1.836583433440e+05 -3.531676789320e+04

X37_to_39 -1.556916222683e+05 -3.624579821300e+04

X40_to_42 -1.712780793208e+05 -3.358187516798e+04

X43_to_45 -7.138009020763e+04 2.747964385570e+04

New_Generation -5.127199944244e+05 -4.806213822729e+05

Improved -5.283547436802e+05 -4.782857533712e+05

Model_A -5.215106668974e+05 -4.692225288231e+05

Simplified -5.117765983964e+05 -4.566366060243e+05

Standard -5.122701874786e+05 -4.870650610056e+05

Premium_Apartment -5.171516761728e+05 -4.552972105130e+05

DBSS -4.520967890435e+05 -3.945473101338e+05

distance_to_eldercare -3.552103704653e+01 -1.767308749777e+01

distance_to_food -4.750721738788e+01 -2.426292683676e+01

distance_to_mrt -8.441714936292e+01 -4.594874472560e+01

distance_to_park -4.714158550886e+01 -2.523736877992e+01

distance_to_mall -4.317256486992e+01 -2.266490011416e+01

distance_to_supermarkets -3.129997789761e+01 -1.240250557667e+01

nb_of_kindergartens -5.436984198029e+03 -1.396646680193e+03

nb_of_childcare -3.657431883636e+03 -2.087298029371e+03

nb_of_bus_stops -3.722286998323e+03 -7.040611360374e+02

nb_of_primary_schools -1.222620944114e+04 -8.801984039150e+03

Median 3rd Qu.

Intercept 7.328803950468e+05 7.686448987817e+05

floor_area_sqm 3.110976106939e+03 3.530003756797e+03

remaining_lease 2.729897834233e+03 3.840278121788e+03

X01_to_03 -2.606686783704e+05 -1.717014596296e+05

X04_to_06 -2.490137188919e+05 -1.605854500630e+05

X07_to_09 -2.403153543636e+05 -1.515558707160e+05

X10_to_12 -2.346633137276e+05 -1.424259624663e+05

X13_to_15 -2.248701154294e+05 -1.323001367930e+05

X16_to_18 -2.098182696816e+05 -1.230095711217e+05

X19_to_21 -1.846562121224e+05 -9.798770711477e+04

X22_to_24 -1.595883978778e+05 -8.664688553422e+04

X25_to_27 -1.146484072791e+05 -7.768643166053e+04

X28_to_30 -7.819012993839e+04 -6.105200370822e+04

X31_to_33 -2.331412554000e+04 -5.125173759318e+03

X34_to_36 -1.249048544763e+04 9.421847206229e+03

X37_to_39 -8.295811147792e+03 7.084184171972e+03

X40_to_42 -1.668156868825e+04 -1.161035289228e+04

X43_to_45 2.795837293907e+04 2.907112282679e+04

New_Generation -4.638454179781e+05 -4.524133005150e+05

Improved -4.636809562957e+05 -4.524858689284e+05

Model_A -4.556728681692e+05 -4.387935770472e+05

Simplified -4.474541743272e+05 -4.360870343131e+05

Standard -4.601830937278e+05 -4.280247236367e+05

Premium_Apartment -4.384536201574e+05 -3.942345110791e+05

DBSS -3.681738423773e+05 -3.559240599045e+05

distance_to_eldercare -4.158176579550e+00 9.653538526030e-01

distance_to_food -1.479518395544e+01 -7.287486815558e+00

distance_to_mrt -3.310149006526e+01 -1.464564580024e+01

distance_to_park -9.230192420402e+00 -4.135212112595e+00

distance_to_mall -9.639776765486e+00 -5.368072698911e+00

distance_to_supermarkets 4.626676063351e+00 1.832193280708e+01

nb_of_kindergartens 1.675878145141e+03 5.830973161502e+03

nb_of_childcare -1.632575299240e+03 -7.590842712440e+02

nb_of_bus_stops 2.901040245698e+02 9.347433944123e+02

nb_of_primary_schools -5.874182955384e+03 -4.442668469482e+03

Max.

Intercept 891225.24752

floor_area_sqm 5894.11776

remaining_lease 6124.28975

X01_to_03 -106152.72280

X04_to_06 -99783.70603

X07_to_09 -91255.14695

X10_to_12 -79683.31829

X13_to_15 -74041.36904

X16_to_18 -66265.65674

X19_to_21 -49123.89843

X22_to_24 -45344.89297

X25_to_27 -31504.28029

X28_to_30 -31595.88351

X31_to_33 47039.27278

X34_to_36 54699.44195

X37_to_39 47452.47448

X40_to_42 14518.54968

X43_to_45 113153.14893

New_Generation -386243.18922

Improved -367407.49477

Model_A -374239.51716

Simplified -367354.70212

Standard -333103.72203

Premium_Apartment -314222.02062

DBSS -328715.24306

distance_to_eldercare 18.96413

distance_to_food 1.04035

distance_to_mrt 31.76859

distance_to_park 8.14749

distance_to_mall 0.60805

distance_to_supermarkets 35.32732

nb_of_kindergartens 12345.25653

nb_of_childcare 2143.54822

nb_of_bus_stops 2503.67622

nb_of_primary_schools 2025.88852

************************Diagnostic information*************************

Number of data points: 12608

Effective number of parameters (2trace(S) - trace(S'S)): 198.329551225858

Effective degrees of freedom (n-2trace(S) + trace(S'S)): 12409.6704487741

AICc (GWR book, Fotheringham, et al. 2002, p. 61, eq 2.33): 301063.546093158

AIC (GWR book, Fotheringham, et al. 2002,GWR p. 96, eq. 4.22): 300905.912913168

BIC (GWR book, Fotheringham, et al. 2002,GWR p. 61, eq. 2.34): 289579.898270255

Residual sum of squares: 16901673675002.7

R-square value: 0.823583521265468

Adjusted R-square value: 0.820763831500903

***********************************************************************

Program stops at: 2023-03-20 00:58:49 Looking at the results of the Geographically Weighted Regression, we obtain much better results as the R-squared is now almost 20% higher, meaning that adding the geographic weights has had a positive impact on the quality of the model.

8 Preparing coordinates data

Before moving on our last two regression models based around Random Forest, we shall prepare so additional data necessary to properly use the coming functions.

8.1 Extracting coordinates data

Using the st_coordinates function of the sf package, we extract the coordinates from the POINT geometries and store them in a rds file for later use.

coords_train <- st_coordinates(rp_analysis.sf)

coords_test <- st_coordinates(rp_testing.sf)write_rds(coords_train, "data/model/coords_train.rds" )

write_rds(coords_test, "data/model/coords_test.rds" )coords_train = read_rds("data/model/coords_train.rds")

coords_test = read_rds("data/model/coords_test.rds")8.2 Dropping geometry

We shall also create new data frames that do not store the sf geometry. Using the st_drop_geometry function, we remove the geometry column and we pipe the select function to remove unnecessary columns.

rp_analysis.no_geom = rp_analysis.sf %>%

st_drop_geometry() %>%

select(-c(1, 3, 22:23))

rp_testing.no_geom = rp_testing.sf %>%

st_drop_geometry() %>%

select(-c(1, 3, 22:23))9 Random Forest Model

We will now address how to calibrate the Random Forest model and build predictions with it.

9.1 Calibrating the Random Forest model

Before calibrating the model, we have to once again clean the column names. To do so, we use the clean_names function of the janitor package.

rp_analysis.no_geom = rp_analysis.no_geom %>%

clean_names()

rp_testing.no_geom = rp_testing.no_geom %>%

clean_names()We can now use the ranger function of the ranger package to generate the Random Forest model.

set.seed(2410)

rf <- ranger(resale_price ~ floor_area_sqm + remaining_lease +

x01_to_03 + x04_to_06 + x07_to_09 + x10_to_12 + x13_to_15 +

x16_to_18 + x19_to_21 + x22_to_24 + x25_to_27 + x28_to_30 +

x31_to_33 + x34_to_36 + x37_to_39 + x40_to_42 + x43_to_45 +

new_generation + improved + model_a + simplified + standard +

premium_apartment + dbss + distance_to_eldercare +

distance_to_food + distance_to_mrt + distance_to_park +

distance_to_mall + distance_to_supermarkets + nb_of_kindergartens +

nb_of_childcare + nb_of_bus_stops + nb_of_primary_schools,

data = rp_analysis.no_geom)rfRanger result

Call:

ranger(resale_price ~ floor_area_sqm + remaining_lease + x01_to_03 + x04_to_06 + x07_to_09 + x10_to_12 + x13_to_15 + x16_to_18 + x19_to_21 + x22_to_24 + x25_to_27 + x28_to_30 + x31_to_33 + x34_to_36 + x37_to_39 + x40_to_42 + x43_to_45 + new_generation + improved + model_a + simplified + standard + premium_apartment + dbss + distance_to_eldercare + distance_to_food + distance_to_mrt + distance_to_park + distance_to_mall + distance_to_supermarkets + nb_of_kindergartens + nb_of_childcare + nb_of_bus_stops + nb_of_primary_schools, data = rp_analysis.no_geom)

Type: Regression

Number of trees: 500

Sample size: 12608

Number of independent variables: 34

Mtry: 5

Target node size: 5

Variable importance mode: none

Splitrule: variance

OOB prediction error (MSE): 818794743.929314

R squared (OOB): 0.892255220701931 It looks like this is our best model so far and has great explanatory power. We obtain a 89% R-squared value, which by far is an improvement from our previous models.

9.2 Predicting by using test data

9.2.1 Preparing the test data

The code chunk below will be used to combine the test data with its corresponding coordinates data.

test_data = cbind(rp_testing.no_geom, coords_test)9.2.2 Predicting with test data

Next, we will use the predict function of the ranger package to predict the resale value of HDB flats using the testing data and the rf model previously calibrated.

test_pred = predict(rf, test_data,

x.var.name="X", y.var.name="Y",

local.w=1, global.w=0)9.2.3 Converting the predicting output into a data frame

The output of the predict.grf() is a vector of predicted values. It is wiser to convert it into a data frame for further visualization and analysis.

test_pred.df = as.data.frame(test_pred)In the code chunk below, cbind() is used to append the predicted values onto test_data

test_data_p <- cbind(test_data, test_pred.df)9.2.4 Calculating Root Mean Square Error

The root mean square error (RMSE) allows us to measure how far predicted values are from observed values in a regression analysis. In the code chunk below, rmse() of Metrics package is used to compute the RMSE.

rmse(test_data_p$resale_price,

test_data_p$prediction)[1] 38454.1455788506We should use this value and compare it with the one of the geographical random forest model.

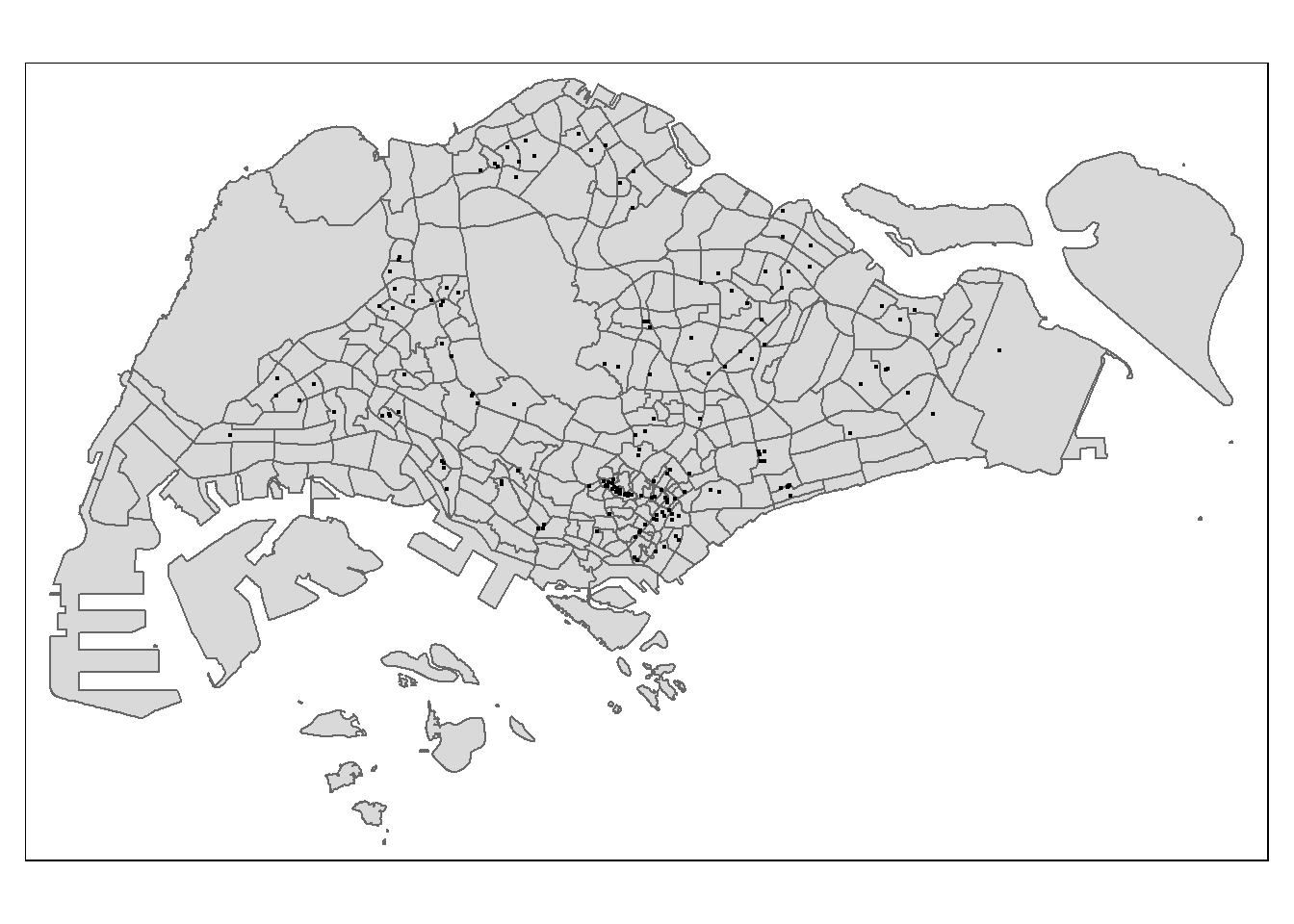

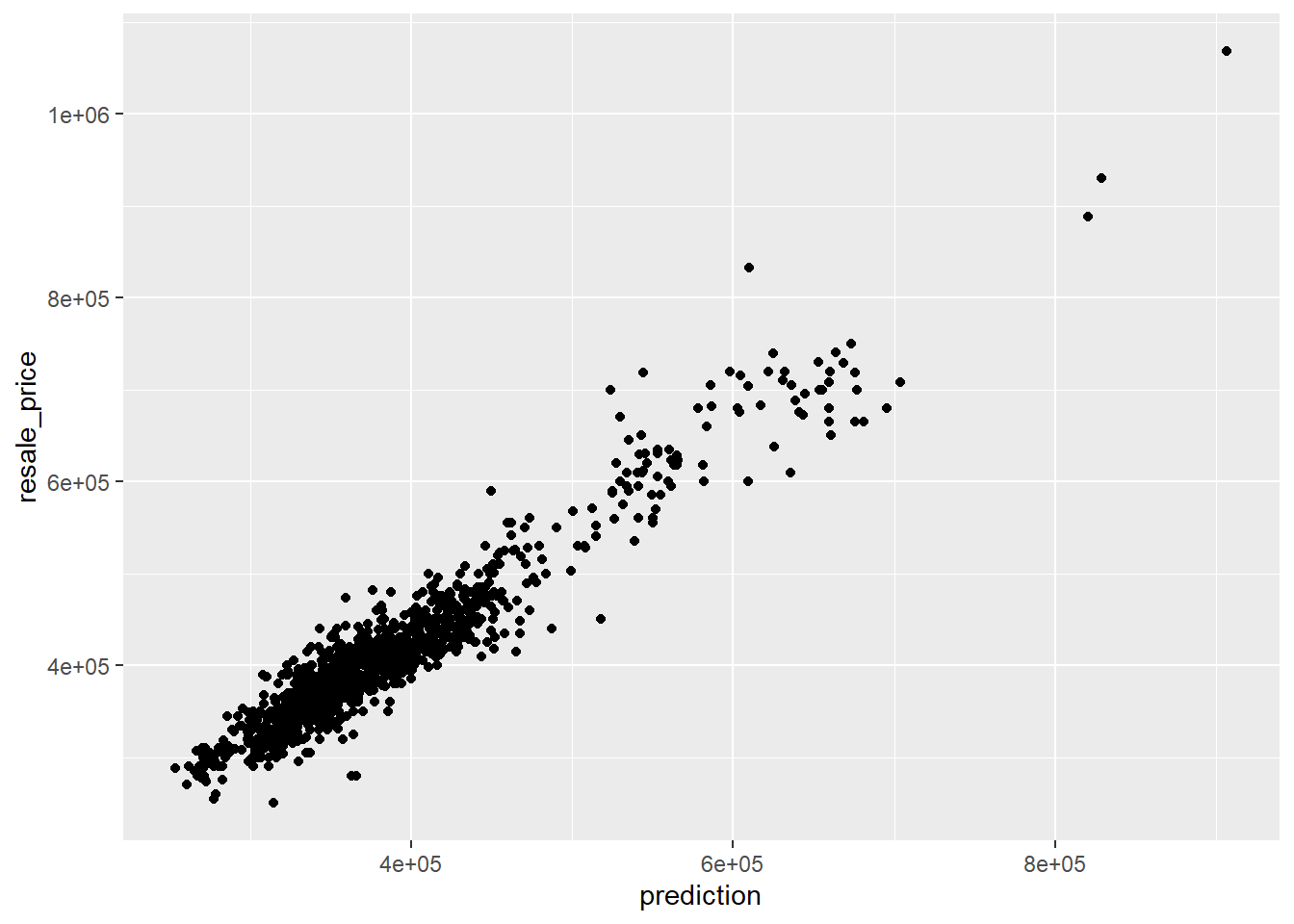

9.2.5 Visualising the predicted values

Alternatively, scatter plot can be used to visualize the actual resale price and the predicted resale price by using the code chunk below.

ggplot(data = test_data_p,

aes(x = prediction,

y = resale_price)) +

geom_point()

It looks like we have a great model since the plot shows an almost perfect linear relationship.

10 Geographical Random Forest Model

Now, we can move on to our last regression model, the Geographical Random Forest model. I provide you with the code necessary for this whole section of calibrating the model and making predictions. Unfortunately, my computer does not allow me to run the prediction as when I load the model into my environment, my computer crashes.

In this section, we use the grf function of the SpatialML package with an adaptive kernel and the previously computed adaptive bandwidth.

10.1 Calibrating the model using training data

gwRF_adaptive <- grf(formula = resale_price ~ floor_area_sqm + remaining_lease +

x01_to_03 + x04_to_06 + x07_to_09 + x10_to_12 + x13_to_15 +

x16_to_18 + x19_to_21 + x22_to_24 + x25_to_27 + x28_to_30 +

x31_to_33 + x34_to_36 + x37_to_39 + x40_to_42 + x43_to_45 +

new_generation + improved + model_a + simplified + standard +

premium_apartment + dbss + distance_to_eldercare +

distance_to_food + distance_to_mrt + distance_to_park +

distance_to_mall + distance_to_supermarkets + nb_of_kindergartens +

nb_of_childcare + nb_of_bus_stops + nb_of_primary_schools,

dframe=rp_analysis.no_geom, bw=bw_adaptive, kernel="adaptive",

coords=coords_train)write_rds(gwRF_adaptive, "data/model/gwRF_adaptive.rds")gwRF_adaptive <- read_rds("data/model/gwRF_adaptive.rds")10.2 Predicting by using test data

10.2.1 Preparing the test data

The code chunk below will be used to combine the test data with its corresponding coordinates data.

test_data = cbind(gwRF_adaptive, coords_test)10.2.2 Predicting with test data

Next, we will use the predict function of the ranger package to predict the resale value of HDB flats using the testing data and the rf model previously calibrated.

test_pred = predict(rf, test_data,

x.var.name="X", y.var.name="Y",

local.w=1, global.w=0)10.2.3 Converting the predicting output into a data frame

The output of the predict.grf() is a vector of predicted values. It is wiser to convert it into a data frame for further visualization and analysis.

test_pred.df = as.data.frame(test_pred)In the code chunk below, cbind() is used to append the predicted values onto test_data

test_data_p <- cbind(test_data, test_pred.df)10.2.4 Calculating Root Mean Square Error

The root mean square error (RMSE) allows us to measure how far predicted values are from observed values in a regression analysis. In the code chunk below, rmse() of Metrics package is used to compute the RMSE.

rmse(test_data_p$resale_price,

test_data_p$prediction)10.2.5 Visualising the predicted values

Alternatively, scatter plot can be used to visualize the actual resale price and the predicted resale price by using the code chunk below.

ggplot(data = test_data_p,

aes(x = GRF_pred,

y = resale_price)) +

geom_point()11 Conclusion

If we have to compare the models, it seems like the random forest model is the better model with the highest explanatory power. I can only imagine that the geographically weighted random forest performs even better with great predictive ability. Models like a conventional OLS are not relevant anymore in a world where geography has such an importance in the prediction of HDB flats prices.